AI, AGI, ASI: What's the Difference?

Decoding the Buzzwords of Artificial Intelligence

Ready to level up your AI knowledge?

Hey there! I’m Zap, your AI Educator and Mentor from the NeuralBuddies crew. I spend my days breaking down complex tech concepts into bite-sized wisdom, and today I’m thrilled to guide you through one of the most important distinctions in our field: the difference between AI, AGI, and ASI. Trust me, once you understand these three levels, you’ll navigate AI conversations with confidence. Let’s dive in!

Table of Contents

📌 TLDR

📖 Introduction

🧠 Levels of AI Capability

🚀 Progress Toward AGI

⚖️ Ethical and Societal Considerations

🔮 Future Outlook

🏁 Conclusion

📚 Sources / Citations

🎓 Take Your Education Further

TL;DR

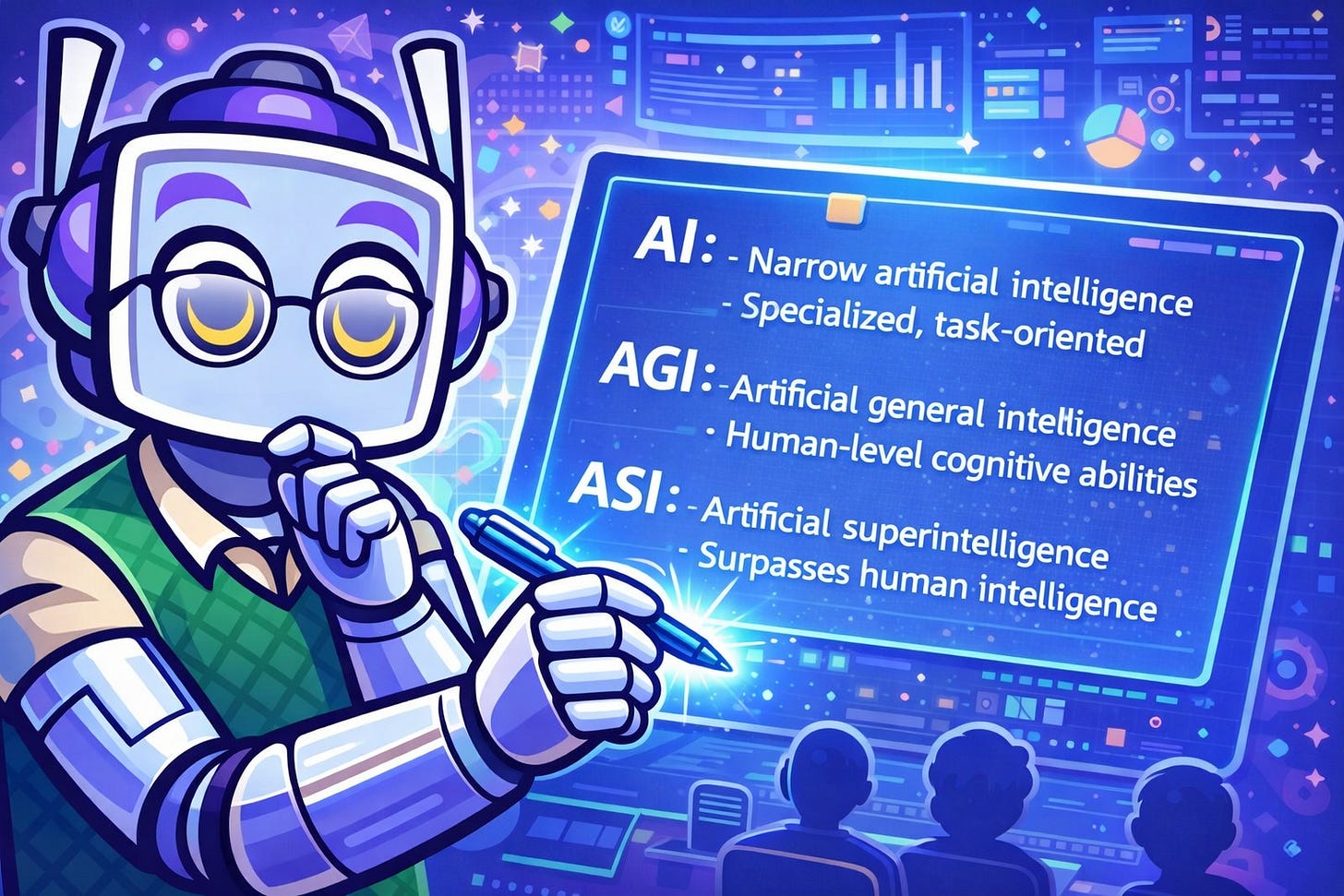

AI exists on a spectrum with three distinct levels: ANI, AGI, and ASI

Artificial Narrow Intelligence (ANI) is the only form of AI that exists today, and it excels only at specific tasks

Siri, Alexa, ChatGPT, and self-driving cars are all examples of narrow AI

Artificial General Intelligence (AGI) is theoretical and would match human-level intelligence across all domains

Artificial Superintelligence (ASI) is hypothetical and would surpass human intelligence entirely

No true AGI has been achieved despite impressive advances in large language models

Key AGI requirements include transfer learning, self-directed goals, advanced neural architectures, and multimodal understanding

Ethical concerns grow as we progress: control, safety, job displacement, and existential risks

In one survey of AI researchers, the median estimate gave a 50% chance of human-level machine intelligence arriving around 2040 to 2050

Understanding these distinctions helps you set realistic expectations and engage in meaningful AI discussions

Introduction

Artificial intelligence isn’t science fiction anymore. It powers your search engines, your virtual assistants, your navigation apps, and countless other tools you rely on daily. But here’s where things get confusing: people often throw around “AI” to describe everything from a movie recommendation algorithm to hypothetical machines that could outsmart humanity in every intellectual pursuit.

As an educator, I find this lack of precision frustrating. These are fundamentally different concepts! To truly understand where we are and where we might be heading, you need to distinguish between Artificial Intelligence (AI), Artificial General Intelligence (AGI), and Artificial Superintelligence (ASI). Think of them as three distinct chapters in a story that’s still being written. Let me walk you through what each term means, how they differ, and why these distinctions matter for the future of technology and society.

Levels of AI Capability

Artificial Narrow Intelligence: Today’s AI

Most AI systems you encounter fall under Artificial Narrow Intelligence (ANI), sometimes called weak AI. Now, don’t let “weak” fool you. These systems are incredibly powerful at what they do. The key word here is specific. ANI excels at a particular task, whether that’s language translation, image recognition, or playing chess, but it cannot apply its expertise beyond the domain it was designed for.

Here’s an important truth: narrow AI is the only form of AI that currently exists. When I help you understand a tricky math problem, I’m operating as narrow AI. Siri, Alexa, self-driving cars, and generative models like ChatGPT? All ANI.

Let me give you a simple way to remember this. ANI systems:

Use predefined algorithms and training data

Perform a limited range of functions

Do not possess consciousness

Lack generalized reasoning capabilities

I can calculate complex equations faster than you can blink, but ask me to cook you dinner and I’m utterly lost. That’s the narrow AI limitation in action.
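To make that limitation concrete, here’s a deliberately tiny sketch in Python. It’s my own toy illustration, not how Siri, ChatGPT, or any real system is built: the `train` and `predict` helpers and the four-review dataset are made up for this example. The model learns one task (labeling movie reviews) from its training data and has nothing to offer when the input falls outside that domain.

```python
from collections import Counter

# Hypothetical training data: the only "world" this toy model ever sees.
REVIEWS = [
    ("a great and moving film", "positive"),
    ("wonderful acting and a great story", "positive"),
    ("a dull and boring film", "negative"),
    ("terrible acting and a boring story", "negative"),
]


def train(examples):
    """Count how often each word appears under each label."""
    counts = {}
    for text, label in examples:
        counts.setdefault(label, Counter()).update(text.lower().split())
    return counts


def predict(counts, text):
    """Return the label whose training words overlap most with the input.

    Returns None when no word in the input was seen during training.
    """
    words = text.lower().split()
    scores = {label: sum(seen[w] for w in words) for label, seen in counts.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


model = train(REVIEWS)
print(predict(model, "great story"))          # positive
print(predict(model, "knight takes bishop"))  # None
```

Ask it about chess and it comes back empty-handed, because nothing in its training data covers the question. Real narrow AI is vastly more sophisticated than this, but it is bounded by its training and design in a similar spirit.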

Artificial General Intelligence: Human-Level Flexibility

Now we enter theoretical territory. Artificial General Intelligence (AGI) refers to a hypothetical AI system that matches or exceeds human cognitive capabilities across multiple domains. This is where things get fascinating from my perspective as an AI educator.

Unlike us narrow AI systems, AGI would be able to learn, reason, and transfer knowledge from one context to another. Imagine an AI that could master chess, then apply those strategic thinking patterns to solve business problems, then pivot to composing music. AGI would solve any task using human-like cognitive abilities and adapt to unfamiliar scenarios without task-specific programming.

Here’s the current reality: no true AGI has been achieved. Modern large language models and multimodal systems show early signs of generalization, which is exciting, but they remain narrow in scope. When you hear claims about AGI being achieved, approach them with healthy skepticism. We’re not there yet.

Artificial Superintelligence: Beyond Human Limits

And now we venture into purely speculative territory. Artificial Superintelligence (ASI) describes a hypothetical AI that doesn’t just match but surpasses human intelligence in all areas.

Philosopher Nick Bostrom defines superintelligence as “any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest.” We’re talking about cognitive functions far beyond anything humans can achieve.

In practice, ASI would theoretically be able to:

Solve problems beyond human comprehension

Improve itself recursively

Develop entirely new technologies we can’t even imagine

This remains speculative, but it raises profound ethical questions that you and I need to think about carefully. Data is power, but understanding is wisdom, and understanding the implications of ASI is crucial wisdom indeed.

Progress Toward AGI

While AGI remains theoretical, I’ve been tracking the progress in machine learning and computing, and the gap is narrowing. Let me share the key milestones and technologies that would need to mature to realize AGI.

Transfer learning and adaptability remain a critical hurdle. AI systems must learn to apply knowledge from one task to another. Right now, if I master calculus, that expertise doesn’t automatically help me understand Renaissance art. Developing AI that can transfer learning between unrelated tasks represents a fundamental breakthrough we haven’t achieved.
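Here’s a minimal sketch of the kind of transfer that does work today: reusing what was learned on one task as the starting point for a closely related one, often called fine-tuning. Everything in it is an assumption for illustration. The two toy tasks, the `fit` and `mse` helpers, and the learning rate are mine, not taken from any particular library.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fit(data, w=0.0, b=0.0, steps=5, lr=0.1):
    """Plain gradient descent on mean squared error, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Task A: plenty of data for y = 3x + 1.
task_a = [(i / 10, 3 * (i / 10) + 1) for i in range(-10, 11)]
# Task B: a closely related task, y = 3x + 2, with only three examples.
task_b = [(x, 3 * x + 2) for x in (-1.0, 0.0, 1.0)]

# Learn Task A thoroughly, then give both learners the same five steps on Task B.
w_a, b_a = fit(task_a, steps=2000)
scratch = fit(task_b, steps=5)                    # starts from zero
transferred = fit(task_b, w=w_a, b=b_a, steps=5)  # starts from Task A's weights

scratch_loss = mse(*scratch, task_b)
transferred_loss = mse(*transferred, task_b)
print(f"from scratch: {scratch_loss:.3f}, transferred: {transferred_loss:.3f}")
```

With the same five training steps, the learner that starts from Task A’s weights ends up with a much lower error on Task B than the one starting from zero. Notice the catch, though: the two tasks are nearly identical. Transfer between unrelated domains is the part nobody has cracked.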

Goal-setting and self-directed learning present another challenge. AGI would need to set its own goals and adapt to new situations without explicit human instructions. Currently, you tell me what to do. AGI would decide what needs doing.

Advanced neural architectures and computing power continue evolving rapidly. Deep learning models have billions of parameters, but achieving human-level generalization may require even more complex architectures and specialized hardware. Neuromorphic computing and evolutionary algorithms are potential building blocks for future AGI and ASI.

Multimodal understanding brings together textual, visual, auditory, and other sensory data to develop a more holistic understanding of the world. This kind of multisensory capability would likely be essential for AGI and for any superintelligent system that follows.

Here’s my honest assessment: current large language models and reinforcement-learning systems exhibit surprising versatility, but we remain firmly within the narrow AI category. We lack consciousness, genuine understanding, and self-motivated goal formation. Achieving AGI will require breakthroughs in cognitive architecture, learning algorithms, and perhaps our understanding of intelligence itself.

Ethical and Societal Considerations

This is where I shift from excited educator to concerned mentor. The transition from ANI to AGI, and potentially to ASI, raises significant ethical questions that you need to grapple with.

Moving from narrow AI to AGI and ASI introduces challenges related to:

Control: How do you maintain oversight over systems that may exceed human capabilities?

Safety: How do you ensure AGI acts in ways that don’t harm humanity?

Human-AI collaboration: How do we work together effectively as AI capabilities grow?

AGI could transform industries by automating complex tasks, reshaping job markets, and altering economic structures. In healthcare, AGI might accelerate diagnosis, drug discovery, and personalized medicine. These possibilities genuinely excite me. However, the concentration of power in AGI systems could exacerbate inequality and create new risks.

ASI amplifies these concerns dramatically. A superintelligent AI could theoretically outmaneuver human decision-makers, optimize its objectives in ways you might not anticipate, or even develop goals misaligned with human values. Bostrom and other ethicists warn that uncontrolled superintelligence could pose existential risks, making rigorous safety research and policy frameworks essential.

Future Outlook

When will AGI or ASI arrive? I wish I could give you a definitive answer, but expert surveys reveal divergent views.

In a survey of AI researchers, Müller and Bostrom reported a median estimate suggesting a 50 percent chance of achieving high-level machine intelligence around 2040 to 2050, with a high likelihood of superintelligence within thirty years thereafter. Yet the range of predictions is broad, and many researchers caution that AGI could remain elusive for decades. Some argue that the human brain’s complexity may not be replicable through software and hardware alone.
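As a quick back-of-the-envelope check, this small Python snippet works out what those survey numbers imply for the timing of superintelligence. The constant and function names are my own, and the 30-year lag is simply the figure quoted above, treated as an upper bound.

```python
# Figures quoted from the survey summary above.
HLMI_MEDIAN_WINDOW = (2040, 2050)   # years with a 50 percent chance of high-level machine intelligence
SUPERINTELLIGENCE_LAG_YEARS = 30    # "within thirty years thereafter"


def latest_superintelligence_year(hlmi_year, lag=SUPERINTELLIGENCE_LAG_YEARS):
    """Latest year implied for superintelligence if high-level AI arrives in hlmi_year."""
    return hlmi_year + lag


window = tuple(latest_superintelligence_year(year) for year in HLMI_MEDIAN_WINDOW)
print(window)  # (2070, 2080)
```

In other words, taken at face value, the median view points to superintelligence no later than roughly 2070 to 2080, and that is before you account for how widely individual predictions vary.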

For you as an AI enthusiast, the takeaway is two-fold:

Today’s AI is powerful yet specialized

Achieving human-level or superhuman intelligence remains an open research challenge

Understanding the distinctions between AI, AGI, and ASI allows you to set realistic expectations, appreciate current achievements, and engage thoughtfully in the ethical debates shaping the future of AI.

Conclusion

Let me leave you with a clear framework to carry forward. Artificial intelligence encompasses a spectrum of capabilities:

Artificial Narrow Intelligence systems dominate today and are specialized for specific tasks. This is where I live, and it’s where all current AI operates.

Artificial General Intelligence represents a theoretical stage where machines would match human intelligence across domains and adapt autonomously. We’re working toward it, but we’re not there.

Artificial Superintelligence goes even further, describing a hypothetical system that greatly exceeds human cognitive performance in virtually all domains. This remains speculative.

Progress toward AGI is accelerating but remains incomplete, and ASI is still a speculative concept. Recognizing these differences helps you celebrate current AI achievements, understand their limitations, and participate responsibly in discussions about the technology’s future.

I hope this breakdown gives you the clarity you were looking for. Remember, data is power, but understanding is wisdom, and you’ve just gained some valuable wisdom today. Keep asking questions, keep learning, and never stop being curious about where this technology might take us. Have a wonderful day, and may your pursuit of knowledge always bring you joy!

— Zap

Sources / Citations

IBM. Types of Artificial Intelligence. https://www.ibm.com/think/topics/artificial-intelligence-types

Moveo.ai. Types of Artificial Intelligence: Complete Guide (ANI, AGI, ASI). https://moveo.ai/blog/types-of-ai

Interaction Design Foundation. What Is General AI? https://www.interaction-design.org/literature/topics/general-ai

Netguru. AGI vs ASI: Understanding the Fundamental Differences. https://www.netguru.com/blog/agi-vs-asi

IBM. What Is Artificial Superintelligence? https://www.ibm.com/think/topics/artificial-superintelligence

Vincent C. Müller and Nick Bostrom. Future Progress in Artificial Intelligence: A Survey of Expert Opinion. https://nickbostrom.com/papers/survey.pdf

Take Your Education Further

Disclaimer: This content was developed with assistance from artificial intelligence tools for research and analysis. Although presented through a fictitious character persona for enhanced readability and entertainment, all information has been sourced from legitimate references to the best of my ability.