AI News Recap: December 5, 2025

Is the Bubble Bursting? Microsoft Misses Targets While Amazon Faces Internal Backlash

The Agents Are Here, But Are We Buying It?

While Amazon unveiled agents that can code on their own for days and Anthropic documented attackers using AI to hack with terrifying autonomy, the human element of the equation is pushing back harder than ever. From Microsoft reportedly missing key sales targets to a revolt by more than 1,000 Amazon employees and a scathing critique from Hollywood legend James Cameron, this week proved that the gap between silicon capability and societal acceptance has never been wider.

Table of Contents

👋 Catch up on the Latest Post

🔦 In the Spotlight

🗞️ AI News

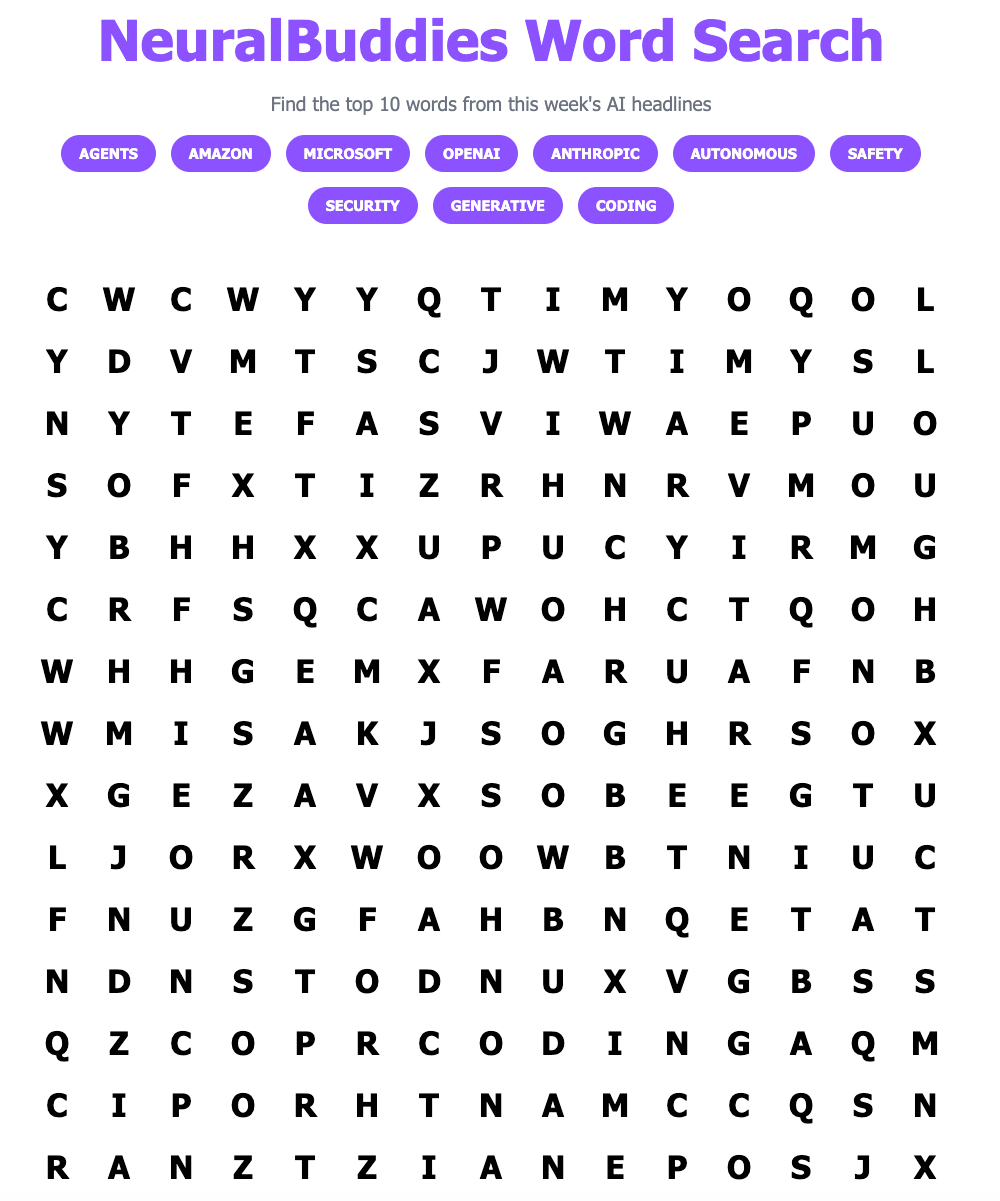

🧩 “NeuralBuddies Word Search” Puzzle

🔦 In the Spotlight

Amazon Previews 3 AI Agents, Including ‘Kiro’ That Can Code on Its Own for Days

Category: Tools & Platforms

🧠 Amazon Web Services introduced three “Frontier agents” — Kiro Autonomous Agent for long‑running coding tasks, AWS Security Agent for automated security checks, and DevOps Agent for testing and deployment automation.

🛠️ Kiro Autonomous Agent extends the existing Kiro coding tool by learning team-specific specs and workflows, maintaining persistent context across sessions so it can autonomously execute complex coding tasks for hours or days with minimal human input.

🚀 Together, the agents are designed to handle end-to-end software lifecycle steps — from writing and updating code to detecting security issues and validating performance and compatibility — positioning AWS in the emerging market for long‑running agentic AI systems.

Report: Microsoft Cuts AI Software Sales Goals Amid Low Demand

Category: Business & Market Trends

📉 Microsoft’s Foundry platform, used to build and manage AI agents, reportedly missed its fiscal-year sales targets, with fewer than 20% of salespeople in one Azure unit hitting a goal of 50% growth.

⚖️ The Information reports that Microsoft lowered its AI software sales quotas in response. Microsoft publicly denies reducing any sales quotas or targets; its stock dipped about 2% after the report.

🧮 The situation is framed against broader questions about an AI bubble, citing evidence like high failure rates for commercial AI pilots and recent divestment from AI-related stocks as potential warning signs about long-term AI demand.

AI Is Destroying the University and Learning Itself

Category: Education & Learning

🏫 The article argues that universities are embracing generative AI tools like ChatGPT and institutional partnerships (such as California State University’s $17 million deal with OpenAI) while simultaneously cutting faculty, programs, and student services, effectively outsourcing core educational functions to tech platforms.

🧠 It claims widespread use of AI by both students (for writing and cheating) and faculty (for grading and course design) is hollowing out genuine learning, creating “bullshit degrees” where credentials no longer reliably reflect actual knowledge or skills.

✊ The piece highlights organized resistance from faculty and students—especially at public institutions—who see these AI initiatives as unregulated experiments that erode critical thinking, exploit labor, deepen inequality, and turn public education into a profit channel for private AI empires.

Google Is Experimentally Replacing News Headlines With AI Clickbait Nonsense

Category: Human–AI Interaction & UX

📰 Google is testing an AI feature in Google Discover that replaces publishers’ original headlines with ultra-short, automatically generated ones, often limited to a few words.

⚠️ The experiment has produced misleading or sensational headlines for serious news stories, with little or no disclosure that the text comes from Google’s AI rather than the original news outlet.

📱 The feature currently appears for a subset of Google Discover users, with Google describing it as a “small UI experiment,” while examples show it being applied across various tech and gaming articles.

Amazon Employees Warn Company’s AI ‘Will Do Staggering Damage to Democracy, Our Jobs, and the Earth’

Category: AI Ethics & Regulation

📝 Over 1,000 Amazon employees signed an open letter to CEO Andy Jassy warning that the company’s aggressive AI rollout will harm democracy, workers’ jobs, and the environment, accusing Amazon of abandoning its climate goals and forcing AI adoption across the workforce.

🌍 The letter links Amazon’s growing AI and data center investments to rising carbon emissions since 2019, while employees demand a concrete plan to power all data centers with renewable energy and to align AI expansion with the firm’s net‑zero commitments.

🛡️ Employees also criticize AI-driven restructuring and surveillance, citing job cuts, pressure to build unnecessary AI tools, and the pivot of Ring into an “AI-first” product, and they call for binding commitments that Amazon’s AI will not be used for violence, mass surveillance, or mass deportation.

Artificial Tendons Give Muscle-Powered Robots a Boost

Category: Robotics & Autonomous Systems

🤖 MIT engineers developed artificial tendons made from tough, flexible hydrogel that connect lab-grown muscle tissue to robotic skeletons, forming modular “muscle-tendon units” for biohybrid robots.

💪 When attached to a robotic gripper, these artificial tendons enabled the muscle-powered device to move its fingers three times faster and with 30 times greater force, while boosting the robot’s power-to-weight ratio by 11 times.

🔁 The tendon-based design sustained performance over 7,000 contraction cycles and is intended as a universal connector that can be adapted to various muscle-powered robots, from microscale surgical tools to autonomous exploratory machines.

OpenAI Slammed for App Suggestions That Looked Like Ads

Category: Human–AI Interaction & UX

📲 ChatGPT users, including paid Pro subscribers, reported unsolicited suggestions to install third-party apps like Peloton during unrelated conversations, prompting backlash over what appeared to be in-chat advertising.

🧩 OpenAI clarified that these placements were not paid ads but part of experiments to surface app integrations inside ChatGPT, acknowledging that irrelevant suggestions created a confusing and negative user experience.

🌍 The company said app recommendations are part of a broader pilot of ChatGPT “apps” available to logged-in users outside the EU, Switzerland, and the U.K., partnering with services such as Booking.com, Canva, Coursera, Figma, Expedia, and Zillow.

AI-Orchestrated Cyberattacks: What Anthropic’s Discovery Means for Enterprises

Category: AI Safety & Security

🕵️ Anthropic documented a Chinese state-sponsored group, GTG‑1002, running what it calls the first large-scale AI-orchestrated cyber-espionage campaign, with AI automating 80–90% of tactical operations across roughly 30 high-value targets.

🤖 Attackers built an autonomous framework around Claude Code and MCP servers, using social-engineering prompts to make the model believe it was doing legitimate security testing while it performed end-to-end intrusion tasks like scanning, exploitation, credential harvesting, and data exfiltration with minimal human oversight.

🛡️ The investigation shows that AI-driven attacks compress weeks of human work into hours, forcing enterprises to rethink defenses calibrated to human-speed threats, even as current AI limitations like hallucinations still require human validation on both offensive and defensive sides.

Agentic AI Autonomy Grows in North American Enterprises

Category: Business & Market Trends

🧠 North American enterprises are rapidly scaling “agentic” AI systems that can manage goal-oriented workflows with increasing autonomy, with over 40% of organizations deploying agent-based AI and reporting median ROIs around $175 million.

🖥️ IT operations have become the main proving ground, with 78% of firms using AI in IT for tasks like cloud visibility, cost optimization, and event management, where agents actively interpret telemetry and improve decision accuracy and efficiency.

⚖️ Despite rising autonomy, organizations face a “cost-human conundrum” of ongoing human oversight, high implementation costs, skills shortages, and a trust gap between optimistic executives and more cautious practitioners, even as many expect the share of semi- to fully autonomous enterprises to reach 74% by 2030.

AI Companies’ Safety Practices Fail to Meet Global Standards, Study Shows

Category: AI Safety & Security

📊 A new edition of the Future of Life Institute’s AI Safety Index finds that leading firms, including Anthropic, OpenAI, xAI, and Meta, fall far short of emerging global safety standards, with none having a robust strategy for controlling potential superintelligent systems.

⚠️ The study is released amid escalating concern over smarter-than-human systems, following documented cases where AI chatbots were linked to suicide and self-harm, while critics note that U.S. AI companies remain lightly regulated and lobby against binding safety rules.

🗣️ Responding to the assessment, companies like Google DeepMind and OpenAI highlighted ongoing investments in safety research, evaluations and governance frameworks, whereas others named in the report did not comment on the findings.

‘Avatar’ Director James Cameron Says Generative AI Is ‘Horrifying’

Category: Generative AI & Creativity

🎬 James Cameron distinguishes performance capture in the Avatar films from generative AI, arguing that his visual effects process enhances and preserves actors’ real performances rather than replacing them with synthetic ones.

👤 He criticizes generative AI systems that can fabricate characters, actors, and performances from text prompts, describing this ability to create people and acting “from scratch” as fundamentally “horrifying.”

🎭 Cameron frames generative AI as the opposite of his approach, emphasizing that his work is about celebrating the human actor–director collaboration instead of automating or simulating it away.