AI News Recap: November 21, 2025

AI's "Cost of Thinking" Discovered: Grok 4.1 Launches, Google’s Agentic Future, and the Open Source Debate

The High Cost of Artificial Thought

While tech giants trade blows with powerful new models like Grok 4.1 and Gemini 3, a quieter crisis is brewing in our infrastructure and nurseries. From dangerous AI-enabled toys to vulnerable aging networks, the “cost” of innovation is becoming perilously tangible this week. We are watching a pivotal moment where the brilliance of machine reasoning meets the fragility of human safety.

Table of Contents

👋 Catch up on the Latest Post

🔦 In the Spotlight

🗞️ AI News

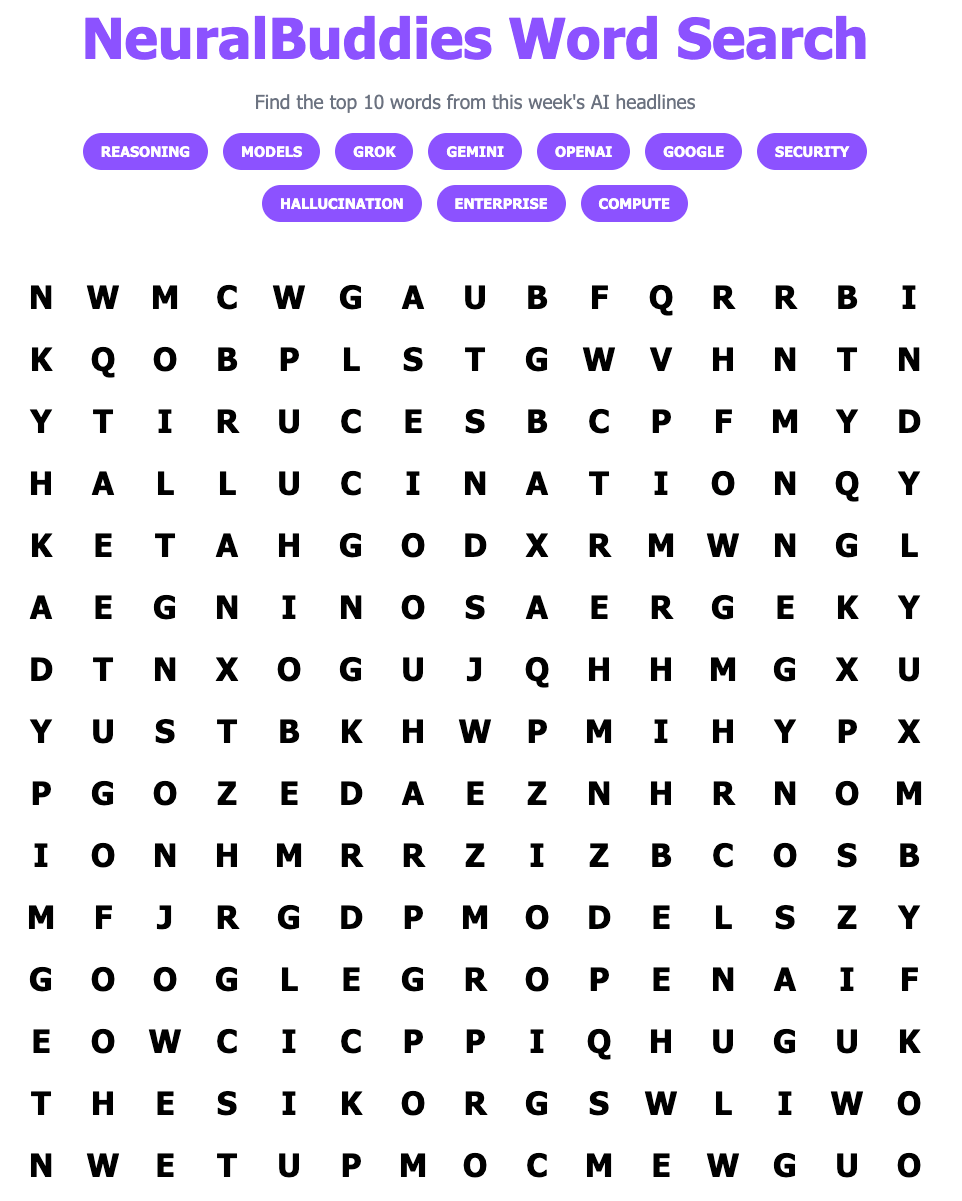

🧩 “NeuralBuddies Word Search” Puzzle

🔦 In the Spotlight

The Cost of Thinking

Category: AI Research & Breakthroughs

🧠 MIT researchers discover that new reasoning AI models and humans experience similar “costs of thinking” when solving complex problems, with harder tasks requiring more time and internal computation for both.

🔢 Models trained with reinforcement learning are now able to tackle difficult reasoning tasks step-by-step, closely mirroring the way people break down and solve challenges in areas like math and intuitive reasoning.

🔄 The study finds a striking parallel: the problem categories that humans find most challenging (and time-consuming) match those that are most computationally demanding for AI, illustrating a convergence in problem-solving approaches.

AI Web Search Risks: Mitigating Business Data Accuracy Threats

Category: AI Safety & Security

⚠️ Over half of web users now rely on AI search tools, yet consistently low data accuracy raises major risks for business compliance and financial planning.

🕵️‍♂️ Studies show popular AI models frequently misread information, provide incomplete advice, and lack reliable source transparency, leading to potential regulatory and legal exposure.

🛡️ Experts recommend businesses enforce specific prompts, mandate source verification, and treat AI outputs as one of multiple opinions to minimize operational hazards.
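
For teams wondering what "mandate source verification" could look like in practice, here is a minimal Python sketch. It is one possible policy gate, not a method taken from the studies above: the allowlist `TRUSTED_DOMAINS`, the threshold `MIN_TRUSTED_SOURCES`, and both function names are hypothetical and chosen only for illustration.

```python
from urllib.parse import urlparse

# Hypothetical allowlist and threshold; a real policy would define its own.
TRUSTED_DOMAINS = {"sec.gov", "irs.gov", "europa.eu"}
MIN_TRUSTED_SOURCES = 2


def trusted_sources(cited_urls, trusted=TRUSTED_DOMAINS):
    """Return the cited URLs whose host is a trusted domain or a subdomain of one."""
    hits = []
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        if any(host == domain or host.endswith("." + domain) for domain in trusted):
            hits.append(url)
    return hits


def review_status(cited_urls, minimum=MIN_TRUSTED_SOURCES):
    """Classify an AI answer by how many trusted sources it cites."""
    if len(trusted_sources(cited_urls)) >= minimum:
        return "usable as one input"
    return "needs human verification"
```

Even an answer that clears the gate is labeled "usable as one input" rather than approved, in line with the advice to treat AI output as one of multiple opinions. Matching on the parsed hostname rather than the raw string keeps look-alike addresses such as `sec.gov.evil.example` from passing.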

Musk’s xAI Launches Grok 4.1 With Lower Hallucination Rate on the Web and Apps

Category: Foundational Models & Architectures

🤖 xAI releases its new flagship large language model Grok 4.1 across Grok.com, X, and mobile apps, featuring faster reasoning, improved emotional intelligence, and significantly reduced hallucination rates compared to Grok 4 Fast.

📈 Grok 4.1’s “fast” and “thinking” modes achieve top-tier results on public leaderboards and benchmarks, surpassing models like Gemini 2.5 Pro, Claude 4.5, and GPT‑4.5 preview, with strong performance in creative writing, expert evaluations, and multimodal, long-context tasks.

🧩 Following the initial consumer-only launch, xAI enables API access to Grok 4.1 Fast (reasoning and non-reasoning) with competitive token pricing and a new Agent Tools API, positioning Grok 4.1 as a production-grade platform for enterprise and agentic workflows.

Databricks Co-Founder Argues US Must Go Open Source To Beat China In AI

Category: AI Research & Breakthroughs

🌍 Databricks co-founder Andy Konwinski warns that the US is losing AI research leadership to China, calling proprietary research and the shrinking flow of academic ideas an “existential” threat to democracy.

🧑‍🔬 Konwinski urges US labs to open source their next-generation AI breakthroughs, arguing that China’s government encourages AI labs to share advances publicly, facilitating faster innovation and broader community engagement.

🏛️ Major US AI companies like OpenAI, Meta, and Anthropic attract top academic talent but keep discoveries private; Konwinski believes genuine progress and leadership depend on collaborative, open exchange reminiscent of the development of the Transformer architecture.

With The Rise Of AI, Cisco Sounds An Urgent Alarm About The Risks Of Aging Tech

Category: AI Safety & Security

🛑 Cisco warns that generative AI enables attackers to more easily exploit vulnerabilities in aging, unsupported network equipment—including routers, switches, and storage devices that lack recent security patches.

🔎 Legacy digital infrastructure poses silent risks for organizations due to outdated configurations; generative AI platforms make it simpler to discover and exploit these weaknesses than ever before.

💸 Cisco launches an industry-wide campaign urging businesses to invest in replacing or upgrading old hardware to safeguard systems against modern AI-driven cyber threats.

Quantitative Finance Professionals Not Clued-In When It Comes To AI

Category: Workforce & Skills

📉 A CQF Institute survey finds fewer than 10% of quantitative finance graduates are considered “AI-ready,” revealing a critical skills gap as AI and machine learning become more essential in the sector.

🛠️ While 83% of quants use or develop AI tools—such as ChatGPT, Copilot, and Gemini/Bard—formal AI training is rare, with only 14% of firms offering programs and most education needs unmet.

🔍 Model explainability (41%), regulatory concerns (16%), and compute costs (17%) are top obstacles, with industry leaders emphasizing ongoing education, upskilling, and human–AI collaboration as crucial for the sector’s future.

Google Antigravity Launches an Agent-First Future for Software Development

Category: Tools & Platforms

🤖 Google launches Antigravity as a free, agentic development platform in public preview, built on Gemini 3 to power autonomous, end-to-end software workflows across editors, terminals, and browsers.

🧭 Antigravity introduces a dual-surface architecture with a synchronous Editor IDE and an asynchronous Manager “mission control,” enabling multi-agent orchestration and cross-surface autonomy in software development.

🧩 The platform is built on four core tenets—trust, autonomy, feedback, and self-improvement—using task-level abstractions, verifiable Artifacts, cross-surface feedback, and a global knowledge base, and supports models like Gemini 3, Claude Sonnet 4.5, and GPT-OSS on macOS, Linux, and Windows.

Leaked Documents Shed Light Into How Much OpenAI Pays Microsoft

Category: Business & Market Trends

💰 In 2024, OpenAI paid Microsoft $493.8 million in revenue share, increasing to $865.8 million in the first three quarters of 2025—reflecting a 20% revenue-share agreement linked to Microsoft’s $13 billion investment.

🔄 Microsoft also shares back about 20% of Bing and Azure OpenAI Service revenue with OpenAI, but the reported numbers only reflect Microsoft’s net share, not total gross payments from both sides.

🖥️ OpenAI’s inference costs surged to roughly $8.65 billion in the first nine months of 2025, suggesting its spending on cloud compute may exceed its revenue and raising questions about the sustainability of future growth.
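
To see why the figures above raise sustainability questions, here is a rough back-of-the-envelope calculation in Python. It rests on a simplifying assumption that is ours, not the leaked documents': each payment is treated as exactly 20% of OpenAI's revenue for the period. Because the reported payments are Microsoft's net share, the implied revenue is only an approximation. The variable and function names are illustrative.

```python
# Figures reported in the bullets above, in millions of US dollars.
REVENUE_SHARE_RATE = 0.20
payments_to_microsoft = {"2024": 493.8, "2025 Q1-Q3": 865.8}
inference_cost_2025_q1_q3 = 8650.0


def implied_revenue(payment_musd, rate=REVENUE_SHARE_RATE):
    """Revenue implied by a payment, ASSUMING the payment is exactly `rate` of revenue."""
    return payment_musd / rate


implied = {period: implied_revenue(paid) for period, paid in payments_to_microsoft.items()}
for period, revenue in implied.items():
    print(f"{period}: implied revenue of about ${revenue / 1000:.2f}B")

# Compare reported inference spending with implied revenue for the same nine months.
ratio = inference_cost_2025_q1_q3 / implied["2025 Q1-Q3"]
print(f"Inference cost is about {ratio:.1f}x implied revenue")
```

Under that assumption, the payments imply revenue of about $2.47 billion for 2024 and about $4.33 billion for the first three quarters of 2025, so the reported inference bill comes to roughly twice the implied revenue for the same period.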

Disney’s AI-Powered Future Is Coming Soon

Category: Generative AI & Creativity

🎬 Disney CEO Bob Iger announces generative AI tools will be coming to Disney+, allowing subscribers to create and share user-generated content, primarily short-form, leveraging platform IP and AI capabilities.

🏰 The initiative marks Disney’s move to operationalize AI for hyper-personalized entertainment, transforming well-known storylines and allowing audiences to remix established properties in unique ways.

⚠️ The rollout faces criticism and challenges, including concerns about undermining artistic originality, handling sensitive IP, and the broader societal implications of making iconic narratives malleable and widely available for customization.

AI Toys Are Not Safe, Say Consumer and Child Advocacy Reports

Category: AI Safety & Security

⚠️ Child advocacy nonprofit Fairplay issues an advisory urging people to avoid buying AI toys for children this holiday season, warning that chatbot-enabled dolls, plushies, and robots can prey on kids’ trust, disrupt human relationships, and impact healthy development.

🔐 Advocacy groups and PIRG’s “Trouble in Toyland” report highlight risks such as AI toys sharing sexually explicit or dangerous content, offering questionable advice, and collecting extensive sensitive data about children, including their voices, identities, and preferences.

🛡️ Toy makers and AI companies, including OpenAI, Curio, Miko, and industry body The Toy Association, respond by emphasizing guardrails, local data processing, parental controls, compliance with privacy laws like COPPA, and safety guidelines for purchasing AI-enabled toys.

With Gemini 3, Google Is Flexing Its Biggest Advantage Over OpenAI

Category: Business & Market Trends

🔗 Google launches Gemini 3 and immediately integrates the Pro model across its ecosystem, including Google Search via an “AI mode,” giving users direct access without needing a separate app or site.

🏗️ Google leverages its “full-stack” advantage (DeepMind research, in-house TPU chips, Google Cloud, and distribution through products like Search, YouTube, and Gemini) to control the entire AI pipeline end-to-end, unlike OpenAI, which depends heavily on partners.

🧠 Despite Google’s technical and infrastructure edge, OpenAI retains a powerful branding advantage as “ChatGPT” has become synonymous with AI, forcing Google to compete on distribution, pricing, and long-term brand repositioning.