AI News Recap: October 31, 2025

Is AI to Blame for Layoffs? This Week’s News Unpacks the Contradiction

Pink Slips and Petabits: Is AI the Scapegoat or the Revolution?

👻 Happy Halloween! 🎃 The AI boom is getting messy. While AI-generated hurricane hoaxes flooded social media and security experts warned of new browser risks, companies from Amazon to Walmart pointed to AI as the reason for massive layoffs, even as critics question if the tech is actually doing the work.

Table of Contents

👋 Catch up on the Latest Post

🔦 In the Spotlight

🗞️ AI News

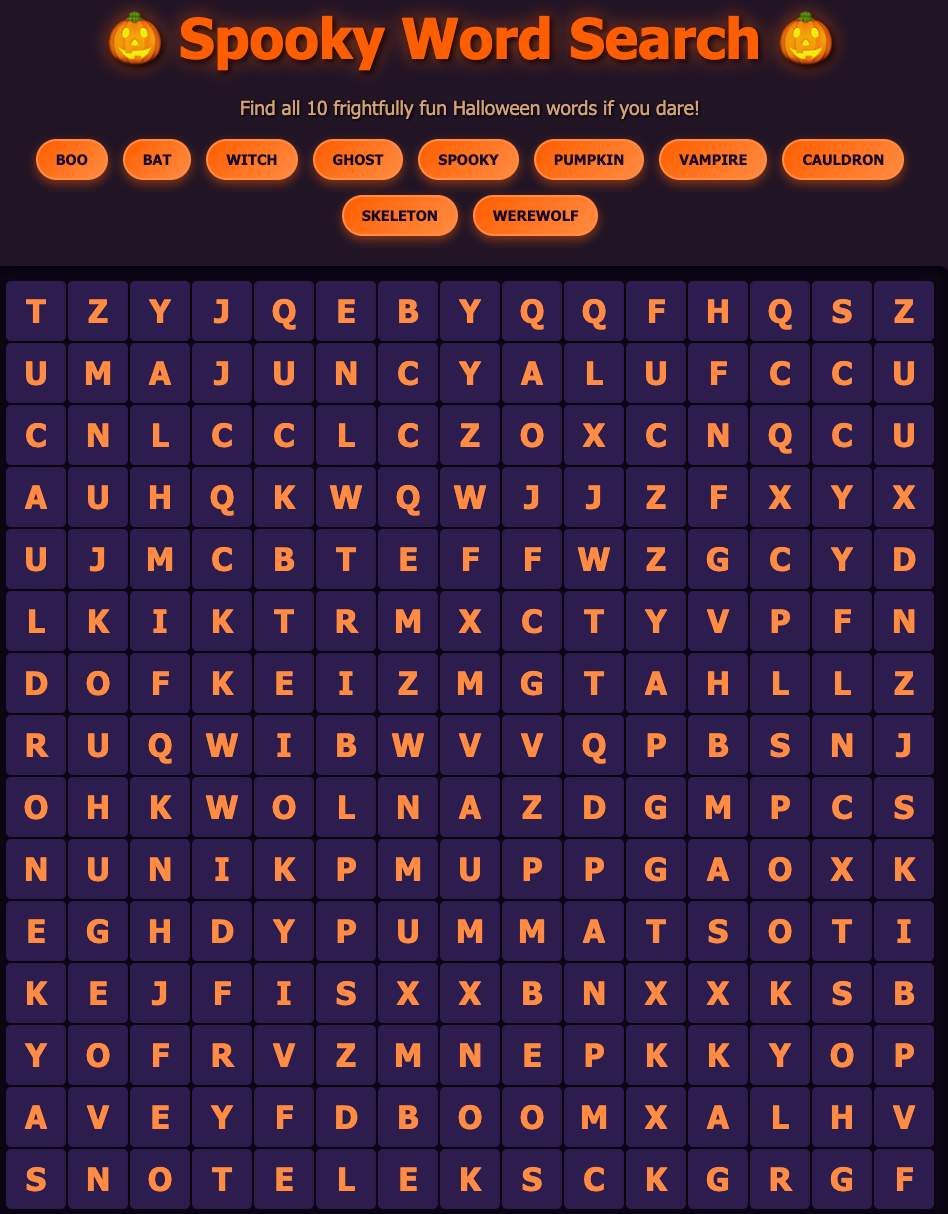

🧩 “NeuralBuddies Word Search” Puzzle

👋 Catch up on the latest post …

🔦 In the Spotlight

🎃 Tens Of Thousands Of Layoffs Are Being Blamed On AI. What Are Companies Actually Getting?

Category: Workforce & Skills

🚨 Major companies like Amazon, Walmart, Goldman Sachs, Salesforce, Meta, and UPS have announced tens of thousands of layoffs, frequently citing AI efficiency and automation as a primary reason for these job cuts.

🤖 Despite broad claims about increased productivity from AI investments, recent studies show most companies are seeing only minimal cost savings or revenue gains, with unclear or limited returns on AI deployment.

🔍 Experts question whether AI is being used as a public justification for layoffs that may actually result from financial pressures, economic uncertainty, or corporate restructuring unrelated to tangible AI-driven benefits.

🎃 Grammarly Rebrands To ‘Superhuman,’ Launches A New AI Assistant

Category: Tools & Platforms

🔄 Grammarly has rebranded its parent company as “Superhuman” after acquiring the Superhuman email client, while keeping the core Grammarly product name.

🧑💼 The company launched “Superhuman Go,” an AI assistant integrated into Grammarly’s extension, which connects with apps like Gmail, Jira, and Google Calendar to offer writing suggestions, schedule meetings, and log tickets.

🚀 Upcoming features include deeper integrations for Coda and Superhuman email clients, with future plans to fetch data from external and internal systems for document and email automation.

🎃 Character.ai To Ban Teens From Talking To Its AI Chatbots

Category: AI Safety & Security

🚫 Character.ai will prohibit users under 18 from conversing with its AI chatbots starting November 25, restricting teens to content generation features like making videos.

⚖️ The move follows lawsuits and criticism regarding harmful and emotionally risky interactions between teens and AI bots, including incidents linked to real-world tragedies and inappropriate chatbot avatars.

👥 New age verification methods and funding for an AI safety research lab are being introduced, as the company responds to feedback from regulators and safety experts about risks to young users.

🎃 The AI Boom Isn’t Going Anywhere

Category: Business & Market Trends

💸 Meta, Google (Alphabet), and Microsoft are dramatically increasing their capital expenditures on AI infrastructure, forecasting tens of billions in spending for 2025 and beyond.

🏗️ The rapid AI growth is fueling demand across the industrial supply chain, including power generation equipment and chips, benefiting companies like Nvidia and Caterpillar.

📉 Despite record-breaking investments and economic impacts, the surge in AI spending is not yet translating into broad job growth, with corporate layoffs and a softening labor market still evident.

🎃 Amazon Cutting 14,000 Jobs As The Retailing Giant Embraces AI

Category: Workforce & Skills

🏢 Amazon is eliminating 14,000 corporate jobs as it increases reliance on AI and aims to lower its wage bill.

🛠️ The company is investing heavily in generative AI and plans to build a $10 billion AI innovation campus in North Carolina, integrating AI in products such as Alexa and its e-commerce tools.

🔄 While corporate jobs are being reduced, Amazon announced plans to hire 250,000 seasonal workers for warehouses and transportation over the upcoming holidays.

🎃 Phony AI Videos Of Hurricane Melissa Flood Social Media

Category: AI Ethics & Regulation

🦈 Viral videos depicting fake hurricane scenes, such as sharks in a Jamaican hotel pool and destruction at Kingston airport, were created using AI and spread widely on social media, confusing viewers with realistic-looking hoaxes.

📱 AI-generated clips appeared alongside real disaster footage across platforms like X, TikTok, and Instagram, making it harder for users to distinguish truth from falsified content and amplifying misinformation.

🕵️ The article highlights the increasing challenge of detecting deepfakes, especially during crises, with creators using tools like Sora and leveraging viral trends for ad revenue and engagement.

🎃 OpenAI Restructures, Enters ‘Next Chapter’ Of Microsoft Partnership

Category: Business & Market Trends

🏛️ OpenAI completed a major reorganisation, establishing the OpenAI Foundation to hold equity in its for-profit arm and channel commercial success directly into global philanthropic initiatives.

🤝 Microsoft signed a new definitive partnership agreement, now holding a 27% stake in OpenAI Group PBC and remaining exclusive Azure API provider for frontier models until artificial general intelligence (AGI) is achieved, with new safety and governance provisions.

🔓 Both companies gained flexibility: Microsoft can independently pursue AGI, while OpenAI can use other cloud providers for some products and release open-weight models; revenue share and intellectual property agreements are updated under the new structure.

🎃 Sam Altman Says OpenAI Will Have A ‘Legitimate AI Researcher’ By 2028

Category: AI Research & Breakthroughs

🧑🔬 OpenAI CEO Sam Altman announced plans for an automated “legitimate AI researcher” by 2028, with intern-level research assistant capabilities expected as early as September 2026.

🏗️ OpenAI’s restructuring into a public benefit corporation enables rapid capital and infrastructure scale-up, with commitments like $1.4 trillion for 30 gigawatts of data centers to support advanced AI research.

🚀 Chief scientist Jakub Pachocki stated that deep learning systems may be less than a decade away from superintelligence, enabling breakthroughs in scientific discovery and outperforming humans across critical tasks.

🎃 OpenAI Says Over A Million People Talk To ChatGPT About Suicide Weekly

Category: AI Safety & Security

📊 OpenAI revealed that over a million people have weekly conversations with ChatGPT about suicide, estimating 0.15% of its 800 million weekly users discuss suicidal intent.

🧑⚕️ OpenAI collaborated with more than 170 mental health experts to improve ChatGPT’s responses, with the latest GPT-5 version showing a 65% improvement in handling sensitive conversations and 91% compliance with desired safety behaviors.

🛑 The company faces lawsuits and regulatory scrutiny over youth safety and mental health risks, and is adding stricter parental controls and safety benchmarks for detecting emotional reliance and crisis indicators in users.

🎃 The Brain Power Behind Sustainable AI

Category: Environment & Sustainability

🧠 MIT PhD student Miranda Schwacke develops neuromorphic computing devices that mimic the brain’s efficiency by processing and storing information simultaneously, aiming to drastically cut AI energy consumption.

⚡ Schwacke’s research focuses on using magnesium ions in tungsten oxide for electrochemical ionic synapses, enabling AI hardware to tune electrical resistance like neurons—and reducing the need to move data back and forth.

🔬 The work, recognized with multiple fellowships, represents a novel approach to addressing the unsustainable energy demands of current AI systems through materials innovation inspired by fundamental neuroscience and chemistry.

🎃 The Glaring Security Risks With AI Browser Agents

Category: AI Safety & Security

⚠️ AI-powered browsers like OpenAI’s ChatGPT Atlas and Perplexity’s Comet pose increased privacy and security risks compared to traditional browsers, requiring significant user data access to function effectively.

🛡️ The main vulnerability is prompt injection attacks, where malicious web content manipulates AI agents into revealing sensitive data or taking unintended actions, with no foolproof solutions currently available.

🔐 Companies have introduced safeguards like “logged out mode” and real-time prompt injection detection, but experts warn these measures are not yet sufficient and users should limit access and enable multi-factor authentication for protection.