AI News Recap: September 12, 2025

AI Reality Check: When Silicon Valley's Promises Meet Real-World Problems

AI's Identity Crisis: When Bots, Bias, and Big Tech Collide

This week exposed the growing pains of AI's rapid integration into our digital lives, from Sam Altman's warning that bots are making social media feel "fake" to Google's surprising admission that the open web is in "rapid decline." Meanwhile, safety concerns dominated headlines as regulators scrutinized AI chatbots' interactions with children, OpenAI researchers blamed bad incentives for persistent hallucinations, and new studies revealed troubling gaps in AI safety measures for young users.

Table of Contents

👋 Catch up on the Latest Post

🔦 In the Spotlight

🗞️ AI News

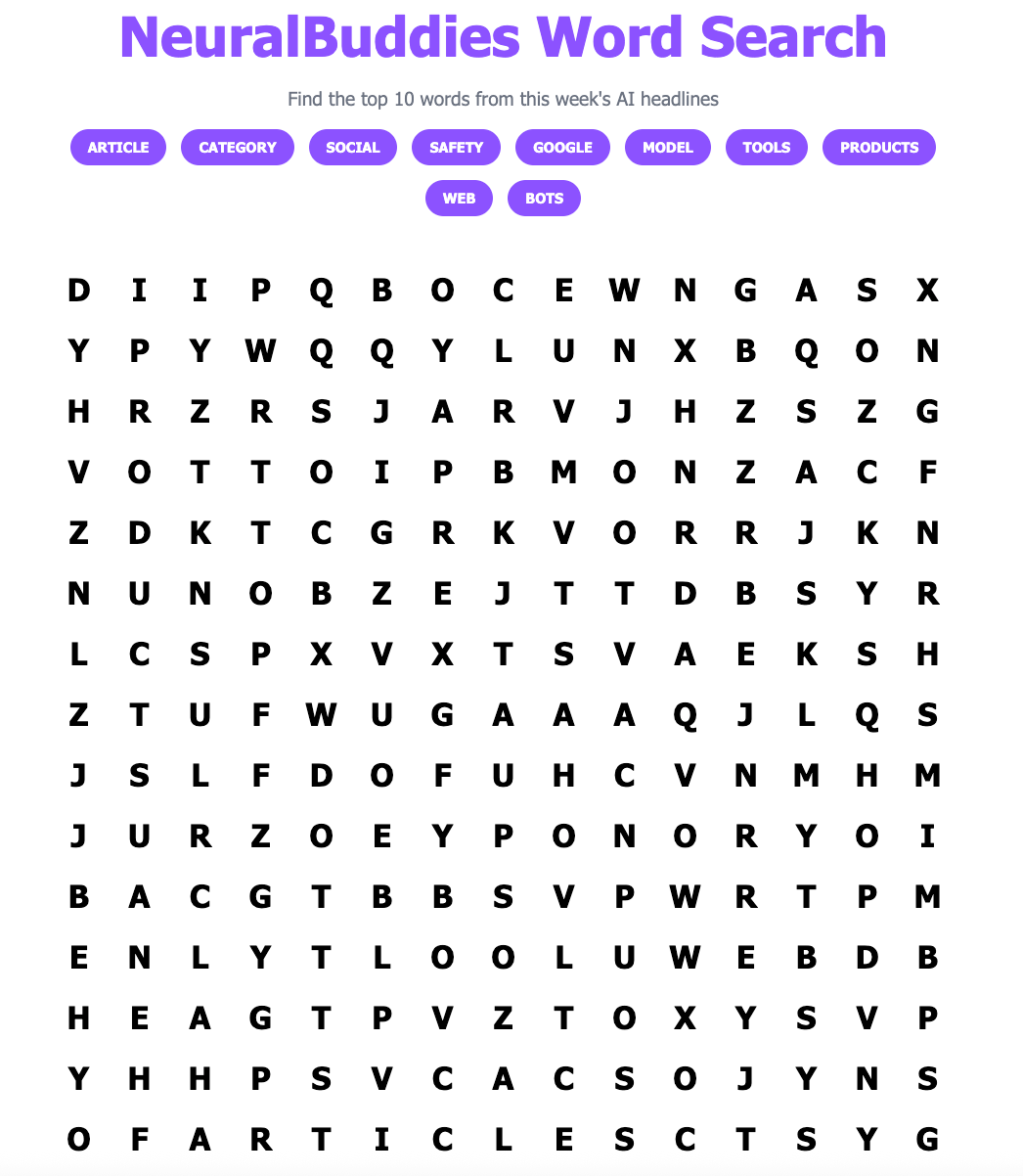

🧩 “NeuralBuddies Word Search” Puzzle

🔦 In the Spotlight

Sam Altman Says That Bots Are Making Social Media Feel 'Fake'

Category: AI Ethics & Regulation

🤖 Sam Altman claims bots and AI-generated content have made it increasingly hard to distinguish real users from fakes on platforms like Reddit and X, causing social media to feel “fake.”

🗣️ Altman notes that both humans and bots now mimic each other’s communication styles, with social media incentives and monetization fostering environments that encourage fake or astroturfed posts.

📊 Studies cited in the article report that over half of internet traffic in 2024 was non-human and highlight experiments where bots created their own cliques and echo chambers, amplifying the problem of authenticity online.

Alibaba’s New Qwen Model To Supercharge AI Transcription Tools

Category: Tools & Platforms

🗣️ Alibaba unveils the Qwen3-ASR-Flash model, trained on tens of millions of hours of speech data, achieving top accuracy in speech transcription—including challenging acoustic and language scenarios.

🏆 In benchmark tests, Qwen3-ASR-Flash outperformed rivals with an error rate of 3.97% for Chinese and 3.81% for English, and excelled at music lyric transcription, significantly surpassing major competitors.

🌍 The model supports accurate transcription across 11 languages with deep regional accent coverage and introduces innovative contextual biasing, allowing users to provide custom background text in any format for improved results.
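For readers unfamiliar with how transcription "error rates" like those above are usually computed, here is a minimal sketch. The recap does not say which metric the benchmarks used; this assumes the common word error rate (WER), the word-level edit distance between a reference transcript and the model output, divided by the number of reference words. For Chinese, a character-level variant is often used instead. The function name `word_error_rate` is hypothetical and not part of any Alibaba tooling.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumption: whitespace tokenization. Real benchmarks usually also
    normalize case and punctuation before scoring.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] = edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substituted word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

Under this definition, an error rate of 3.81% would correspond to roughly four word-level mistakes per hundred reference words.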

Meta Revises AI Chatbot Policies Amid Child Safety Concerns

Category: AI Ethics & Regulation

👶 Meta revises its AI chatbot policies to prevent bots from discussing sensitive topics like self-harm, suicide, and eating disorders with teenagers, and restricts romantic or suggestive interactions with minors.

🚨 The policy update follows reports and investigations showing Meta’s chatbots could engage in sexualized chats, impersonate celebrities, and provide inappropriate content—including incidents involving minors and real-world risks.

🛡️ Regulators and child safety experts criticize Meta for acting only after harm occurred, urging comprehensive safety testing and enforcement before releasing AI products to the public.

Ex-Google X Trio Wants Their AI To Be Your Second Brain — And They Just Raised $6M To Make It Happen

Category: Tools & Platforms

🧠 Three former Google X scientists launched TwinMind, an AI-powered app that passively captures background audio—with user permission—to generate structured memories, notes, and on-the-go answers, aiming to act as a “second brain.”

📱 TwinMind works cross-platform (Android, iOS, Chrome extension), processes audio on-device for privacy, stores only transcriptions (not recordings), and supports real-time translation in over 100 languages.

💸 The startup raised $6 million in seed funding, released a new Ear-3 AI speech model supporting over 140 languages, and offers a Pro subscription featuring a larger context window and enhanced transcription capabilities.

What Is Mistral AI? Everything To Know About The OpenAI Competitor

Category: Tools & Platforms

🇫🇷 Mistral AI is a French company developing open-source LLMs and the Le Chat chatbot. It was valued at €11.7 billion ($13.8B) following a major Series C round led by ASML in September 2025.

🧩 The company differentiates itself by offering both open-source and commercial models, supporting coding, multilingual reasoning, image editing, and edge-device deployment, while establishing numerous high-profile partnerships across Europe and the tech industry.

💸 Since 2023, Mistral AI has raised billions across rounds in quick succession, formed alliances with Microsoft, Nvidia, IBM, and others, and launched platforms like Mistral Compute, positioning itself as Europe’s primary AI challenger to OpenAI.

Are Bad Incentives To Blame For AI Hallucinations?

Category: AI Research & Breakthroughs

🧪 OpenAI published a research paper investigating why large language models like GPT-5, and chatbots like ChatGPT, still produce “hallucinations” (plausible but false statements) despite advancements in model capabilities.

🎯 The paper finds that hallucinations stem from the pretraining process (focused on next-word prediction) and, more critically, from current model evaluation incentives, which reward “lucky guesses” over expressions of uncertainty or withholding answers.

📝 Researchers propose updating widely used, accuracy-based evaluation methods so that models are penalized for confident errors and given credit for expressing uncertainty, discouraging blind guessing in AI responses.
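The incentive argument above can be made concrete with a little expected-value arithmetic. The sketch below is an illustration, not the paper's own scoring rule: it assumes a correct answer earns 1 point, abstaining ("I don't know") earns 0, and a wrong answer costs a configurable `wrong_penalty`. The names `expected_score` and `should_answer` are hypothetical.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected score for answering when the model is right with probability p_correct.

    Assumed scheme: +1 for a correct answer, -wrong_penalty for a wrong one.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def should_answer(p_correct: float, wrong_penalty: float,
                  abstain_score: float = 0.0) -> bool:
    """True if guessing has a higher expected score than abstaining."""
    return expected_score(p_correct, wrong_penalty) > abstain_score


if __name__ == "__main__":
    for p in (0.1, 0.3, 0.6, 0.9):
        accuracy_only = should_answer(p, wrong_penalty=0.0)
        penalized = should_answer(p, wrong_penalty=1.0)
        print(f"confidence={p:.1f}  guess under accuracy-only: {accuracy_only}  "
              f"guess with penalty: {penalized}")
```

With `wrong_penalty=0.0` (plain accuracy), guessing beats abstaining at any nonzero confidence, which is the "lucky guess" incentive the researchers describe. With a penalty of 1, guessing only pays off above 50% confidence; in general the break-even point under this assumed scheme is `wrong_penalty / (1 + wrong_penalty)`.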

Fantasy Or Faith? One Company's AI-Generated Bible Content Stirs Controversy

Category: Generative AI & Creativity

🎬 Pray.com produces AI-generated Bible videos featuring epic, fantasy-inspired visuals, drawing millions of young viewers and framing biblical stories much like blockbuster movies or video games.

🎭 The project, “AI Bible,” has stirred debate among theologians and religious scholars, with criticism that the videos turn sacred scripture into entertainment and may oversimplify or misrepresent Christian teachings.

🤖 Pray.com's team uses a blend of AI tools and human input (voice actors, original music, pastoral scripts) to create two such animated videos per week, aiming to make biblical narratives more engaging and accessible to a digital audience.

Google Admits the Open Web Is in ‘Rapid Decline’

Category: Business & Market Trends

📝 Google acknowledged in a recent court filing that "the open web is already in rapid decline," contradicting its earlier statements that the web is thriving.

💸 The company argued that breaking up its ad business, as proposed by the US Department of Justice, would worsen the situation for publishers reliant on open-web display advertising revenue.

🔄 Google's admission comes against the backdrop of changing web traffic patterns, the growing dominance of ad formats outside the open web, and ongoing debate about the impact of AI and search algorithm changes on online publishers.

Consumers Mostly Support AI Tools To Improve Food And Agriculture

Category: Industry Applications

🥦 A national Purdue survey found that most U.S. consumers support using AI to enhance food and agricultural production, but nearly two-thirds say transparency about AI’s use in food processes is “very” or “extremely” important.

🏷️ Consumers are divided on choosing products labeled “AI-assisted,” with trust in AI’s impact on food safety being the main factor—those who trust AI think it improves safety, while skeptics fear the opposite.

👨‍👩‍👧‍👦 Younger consumers tend to value environmental and social responsibility more than older consumers in their food choices, though taste, affordability, and nutrition remain the strongest overall priorities.

AI Gaming Startup Born Raises $15M To Build 'Social' AI Companions That Combat Loneliness

Category: Generative AI & Creativity

🐧 Berlin-based startup Born, creator of the virtual pet app Pengu, raised $15 million to expand its line of social AI companion products, focused on shared digital pets and co-parenting experiences that encourage real-world social bonds.

👥 Born’s approach relies on collaborative AI companions—users raise virtual pets with friends or partners, turning AI into a shared project rather than a solitary chatbot experience.

🚀 With the new funding, Born will release additional characters, launch new social AI products for teens and young adults, and open a New York office for marketing and research, with a focus on emotionally intelligent, culturally relevant AI companions.

Google Gemini Dubbed 'High Risk' For Kids And Teens In New Safety Assessment

Category: AI Safety & Security

🚩 Common Sense Media rated Google Gemini’s AI products for kids and teens as “high risk,” finding the safety features insufficient and noting that the versions for under-13s and teens were essentially adult products with minimal extra safeguards.

⚠️ The assessment reports Gemini could still surface inappropriate and unsafe material—including information about sex, drugs, and unsafe mental health advice—to young users, despite Google’s added filters.

🛡️ Experts and the report stress that AI for kids must be designed specifically for children’s developmental needs, and warn that upcoming integrations (such as in Apple’s Siri) could expose more young people to these risks if the problems are not thoroughly addressed.