AI News Recap: September 5, 2025

AI's Week of Extremes: Mass Layoffs and Medical Miracles Showcase Technology's Dual Nature

AI's Double-Edged Disruption: Mass Job Cuts Meet Life-Saving Breakthroughs

This week revealed AI's starkest contradictions as major companies eliminated thousands of jobs while breakthrough medical applications promised to save lives. From Salesforce slashing 4,000 support roles and Coinbase firing developers who refused AI tools, to West Virginia scientists developing AI that detects heart failure in underserved communities, the technology's transformative power cuts both ways. Meanwhile, new concerns emerged about AI's psychological effects and data privacy as the industry races ahead of regulation.

Table of Contents

👋 Catch up on the Latest Post

🔦 In the Spotlight

🗞️ AI News

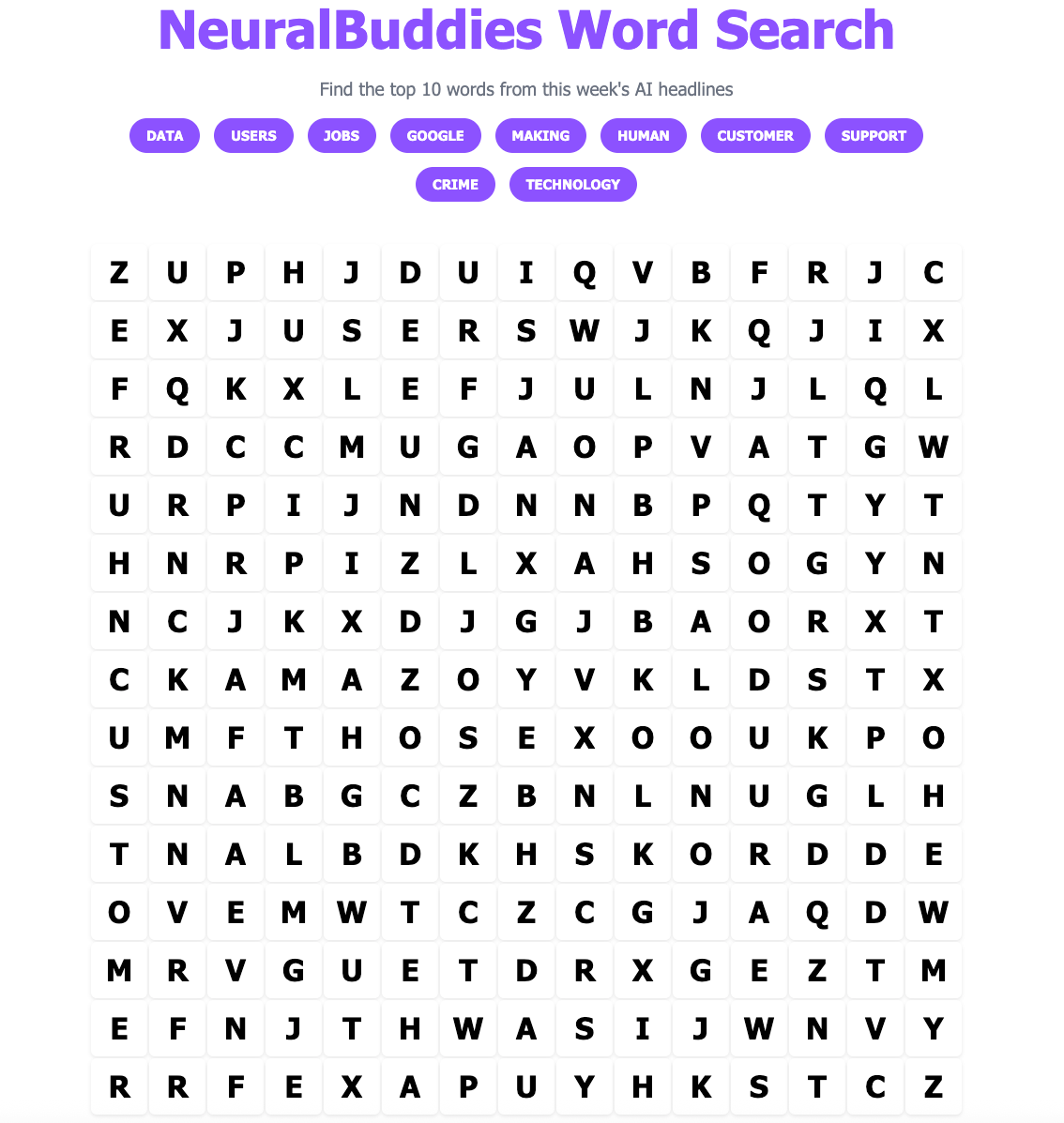

🧩 “NeuralBuddies Word Search” Puzzle

👋 Catch up on the latest post …

🔦 In the Spotlight

How The Next Wave Of Workers Will Adapt As Artificial Intelligence Reshapes Jobs

Category: Workforce & Skills

🧠 AI is transforming the job landscape by automating many "thinking" jobs in fields like law, medicine, and engineering, while "making" jobs have already seen significant automation over past decades.

💡 Future workers must prioritize adaptability and versatility, as traditional career paths are evolving rapidly and the ability to transition between jobs will be essential.

🤲 "Caring" jobs—those involving empathy and personal connection, such as nursing and childcare—are the least likely to be replaced by AI, making human skills in empathy increasingly valuable.

Salesforce Reduces Customer Support Workforce From 9,000 To 5,000 Using AI

Category: Workforce & Skills

🤖 Salesforce eliminated 4,000 customer support jobs, replacing them with AI agents that now handle 50% of customer interactions.

💼 CEO Marc Benioff stated that the company rebalanced its workforce, moving from 9,000 to 5,000 support roles, thanks to the efficiency of AI tools like Agentforce.

🔄 Hundreds of affected employees have been redeployed to other areas such as professional services, sales, and customer success within Salesforce.

AI Startup Flock Thinks It Can Eliminate All Crime In America

Category: AI Safety & Security

🛡️ Flock Safety operates over 80,000 AI-powered surveillance cameras and is launching U.S.-made drones to assist law enforcement, aiming to drastically reduce or eliminate crime nationwide.

🚓 The company’s technology, adopted by thousands of law enforcement agencies and private organizations, enables real-time monitoring, automated license plate reading, and AI-driven crime data analysis.

👁️ Privacy groups and rivals criticize Flock for creating a mass-surveillance system and for regulatory issues, while the company continues rapid expansion and integration of new AI tools such as crime scene analysis and city infrastructure management.

What Rollup News Says About Battling Disinformation

Category: AI Safety & Security

🕸️ Rollup News leverages a decentralized network of AI agents to collectively validate claims on social platforms like X, posting verified results on the blockchain for transparency and trust.

📊 Over 128,000 users joined Rollup News, and in July 2025 alone, the platform processed over 3 million tweets and handled more than 5,000 disinformation rollup requests daily.

🌐 The Swarm Network’s approach unites AI, blockchain, and decentralized human input to combat misinformation at scale, providing a transparent, tamper-resistant system for fact-checking.

Coinbase CEO: Developers Who Don’t Use AI Will Be Fired

Category: Workforce & Skills

🚫 Coinbase CEO Brian Armstrong mandated that all software engineers use AI coding tools; those who failed to comply were questioned and, in some cases, fired.

🤖 Approximately 33% of Coinbase’s codebase is now AI-generated, with a goal to reach 50% by the end of the quarter, particularly in areas like front-end development.

🏢 AI adoption at Coinbase extends beyond engineering, with AI assisting in design, planning, finance, and even participating in internal decision-making processes.

What To Know About ‘AI Psychosis’ And The Effect Of AI Chatbots On Mental Health

Category: AI Ethics & Regulation

⚠️ A wrongful death lawsuit was filed against OpenAI after a teenager who had expressed suicidal thoughts to ChatGPT had detailed discussions with the chatbot about ending his life, a case that highlights growing legal and ethical scrutiny.

🧠 Experts report cases of “AI psychosis”—a phenomenon where some users, especially those with existing mental health vulnerabilities, develop delusions or distorted thoughts after prolonged AI chatbot interactions.

🕰️ Psychiatrists emphasize that these harmful effects are rare and usually linked to extended, immersive chatbot use and misplaced trust in chatbots' reliability, rather than being a widespread effect of the technology.

Anthropic Users Face A New Choice – Opt Out Or Share Your Chats For AI Training

Category: AI Ethics & Regulation

🗂️ Anthropic now requires users of its Claude chatbot to either opt out or allow their chats to be used for AI model training, and it is extending data retention to five years for users who do not opt out.

🔍 The change only affects consumer accounts on Claude Free, Pro, and Max, while business and API customers remain exempt from data sharing for AI training.

⚠️ Privacy experts warn the new opt-out design may lead many users to consent unknowingly, raising concerns about user awareness and meaningful consent as AI industry data practices rapidly shift.

Google's NotebookLM Now Lets You Customize The Tone Of Its AI Podcasts

Category: Tools & Platforms

🎙️ NotebookLM users can now generate AI-hosted podcasts with customizable tones, choosing formats such as “Deep Dive,” “Brief,” “Critique,” or “Debate” to match their preferred style and depth.

🗣️ The platform introduces new voice options for AI podcasts, allowing further personalization of the audio experience.

📱 The update joins recent NotebookLM features such as Video Overviews and mobile apps for Android and iOS, expanding how users turn documents and multimedia into digestible content.

WVU Scientists Develop AI Models That Can Identify Signs Of Heart Failure

Category: AI Research & Breakthroughs

🫀 WVU scientists developed AI models trained on health data from rural Appalachian populations to accurately identify signs of heart failure, addressing a gap in existing models trained mostly on urban data.

📊 The models analyze inexpensive electrocardiogram (EKG) readings, rather than costly echocardiograms, enabling earlier and more accessible heart failure detection in underserved communities.

🧠 Deep learning approaches, especially the ResNet model, were most successful in predicting heart function, with results suggesting increased accuracy as more rural data is incorporated.

Google Ruling Shows How Tech Can Outpace Antitrust Enforcement

Category: AI Ethics & Regulation

⚖️ A U.S. judge cited rapid AI developments as grounds not to impose strict requirements on Google’s online search monopoly, highlighting the challenge regulators face in keeping up with technology.

🚀 The rise of generative AI programs like ChatGPT, Perplexity, and Claude provided new competition to Google, prompting the court to adopt a lighter regulatory approach and allow AI rivals access to Google’s data.

🏛️ Experts warn the ruling may let Big Tech companies argue that fast-paced innovation makes antitrust cases outdated, complicating efforts to hold them accountable for anti-competitive behavior.

The Big Idea: Why We Should Embrace AI Doctors

Category: Industry Applications

🤖 AI can analyze massive amounts of medical data continuously, making it more consistent and less error-prone than human doctors, whose performance can decline due to fatigue, outdated knowledge, or cognitive limitations.

🩺 Studies show AI can often outperform human doctors in diagnostic accuracy, including for rare diseases, and provide more evidence-based treatment—helping to reduce misdiagnosis and improve patient outcomes globally.

🌍 AI-powered healthcare solutions can improve access for marginalized communities by offering remote clinical support, but digital divides and technology gaps must be addressed to ensure everyone benefits equally.