Neural Networks 101: Understanding the “Brains” Behind AI

Demystifying neural networks with intuitive explanations, real-world examples, and accessible insights for curious AI learners.

Unpacking the Digital Brain Behind Modern AI

Neural networks lie at the heart of today's most powerful artificial intelligence systems, from voice assistants and facial recognition to autonomous vehicles and medical diagnostics.

While the term may sound technical or even mysterious, the underlying concepts are surprisingly intuitive when broken down properly. Just as our brains learn by adjusting connections between neurons, neural networks learn by adjusting digital pathways to recognize patterns and make predictions.

In this post, we’ll explore what neural networks are, how they work, and why they’ve become foundational to AI’s evolution. Whether you're just beginning your AI journey or looking to reinforce your understanding, this guide will give you a clear, practical introduction to one of the most transformative technologies of our time.

Table of Contents

🕖 TL;DR

🧠 Understanding Neural Networks

🔗 Neurons and Layers: The Building Blocks of Neural Networks

⚡ Activation Functions: Bringing Neurons to Life

🧪 Training Neural Networks: Learning by Example

🌍 Real-World Applications: Neural Networks in Action

🏁 Final Thoughts

TL;DR

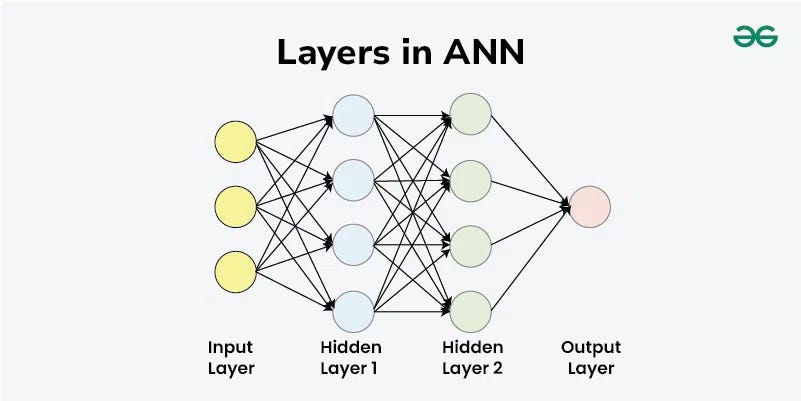

Neural networks are a type of AI model inspired by the human brain. They consist of interconnected nodes (neurons) that work in layers to recognize patterns and make predictions.

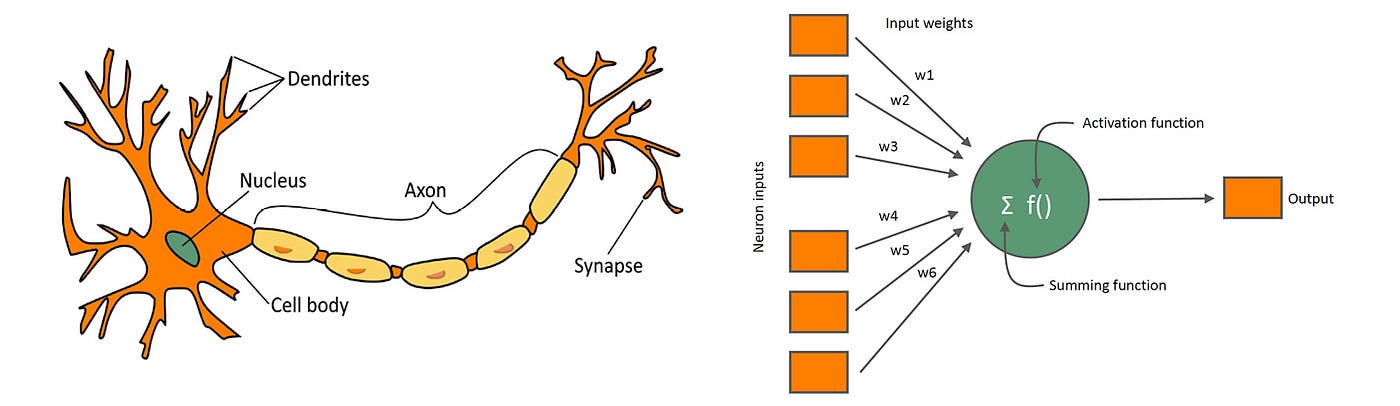

Each neuron takes multiple inputs, applies weights (importance) to them, sums everything up, and then uses an activation function to decide its output. If the signal is strong enough (above a threshold), the neuron “fires” an output to the next layer.

Neural networks learn through a training process: they are fed many examples, make guesses, and gradually adjust their internal connections (weights) based on the errors. This feedback-driven learning (using an algorithm called backpropagation) allows the network to improve over time.

Key concepts include layers (input layer, hidden layers, output layer), activation functions (which introduce non-linear decision-making, enabling the network to handle complex patterns), and weights (numbers that tune the influence of each connection).

Neural networks are behind many AI applications we see today, such as face recognition in photos, voice assistants like Siri, recommendation systems on Netflix, and even self-driving cars. They have become fundamental “digital brains” for solving complex tasks in vision, speech, and decision-making.

Understanding Neural Networks

Neural networks, also known as artificial neural networks (ANNs), are computer models inspired by the human brain’s neural structure. They consist of layers of interconnected “neurons” (nodes) that process data and learn to make decisions in a way loosely analogous to a brain.

This brain-inspired approach is a cornerstone of modern artificial intelligence – neural networks enable machines to recognize patterns and make complex decisions, powering everything from image recognition to language translation. In fact, neural networks and related AI techniques are increasingly influencing virtually every industry, from social media to transportation.

Once a neural network is trained and fine-tuned, it becomes a powerful tool: tasks like speech recognition or image classification, which could take a person hours to do by hand, can be done in seconds. One well-known example is Google Search, which uses neural network models as part of its ranking systems.

🏛️ A bit of history: The concept of neural networks isn’t brand new – it actually dates back to the 1950s. Early pioneers like Frank Rosenblatt developed the first simple neural network model (the “Perceptron”) around 1957. However, progress was slow for a few decades and the field went through ups and downs. It’s only in recent years that neural networks have risen to prominence in AI and begun achieving spectacular results.

Neurons and Layers: The Building Blocks of Neural Networks

To understand neural networks, let’s start small – with the artificial neuron. An artificial neuron is a simple computational unit that mimics a biological neuron’s behavior in a basic way. A real neuron in your brain receives signals through its dendrites, sums up the inputs, and if the combined signal is strong enough (above a certain threshold), the neuron fires an electrical impulse to other neurons.

Similarly, an artificial neuron takes in numerical inputs, multiplies each by a weight (a number that represents the importance of that input), adds a bias term, and then produces an output if the result exceeds a certain threshold. The point at which it “fires” is determined by an activation function (we’ll cover that soon).
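To make that concrete, here is a minimal sketch of a single artificial neuron in plain Python. The function names, the example weights, and the threshold of 0 are all assumptions chosen for illustration, not values from any real model.

```python
def weighted_sum(inputs, weights, bias):
    """Multiply each input by its weight, add everything up, then add the bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def neuron_fires(inputs, weights, bias, threshold=0.0):
    """Return 1 if the weighted sum exceeds the threshold, otherwise 0."""
    return 1 if weighted_sum(inputs, weights, bias) > threshold else 0


# Hypothetical example: two inputs, the first one weighted more heavily.
inputs = [1.0, 0.5]
weights = [0.8, -0.4]
bias = -0.3

total = weighted_sum(inputs, weights, bias)   # 0.8 - 0.2 - 0.3, roughly 0.3
output = neuron_fires(inputs, weights, bias)  # the sum is above 0, so it fires
print(total, output)
```

A hard threshold like this is the simplest possible "firing" rule. Most modern networks swap it for a smoother activation function, which is what the activation section covers.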

Now, a single neuron on its own isn’t very powerful. The true magic happens when you connect neurons into layers. A neural network is organized into layers of neurons:

An input layer that receives the raw data,

one or more hidden layers that transform the data through intermediate steps,

and an output layer that produces the final result.

Every neuron in one layer is typically connected to many neurons in the next layer, forming a densely interconnected web of nodes – hence the term “network.”

Each connection has a weight that can be adjusted during learning. Information flows from the input layer, through the hidden layer(s), and finally to the output layer (this type of network is called a feedforward network, because data moves forward through the layers).
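The forward flow of data can be sketched in a few lines. This is a toy version under stated assumptions: the layer sizes, the weight values, and the choice of a sigmoid for every neuron are all made up for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each row of `weights` belongs to one neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def feedforward(inputs, layers):
    """Pass the data forward through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden = ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1])
output = ([[0.7, -0.5, 0.2]], [0.05])

prediction = feedforward([1.0, 2.0], [hidden, output])
print(prediction)  # a list holding one value between 0 and 1
```

Notice that the output of each layer simply becomes the input of the next one. That is all "feedforward" means.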

To make this more intuitive, let’s use an analogy. Think of a neural network as an assembly line or a team working together on a task. For example, imagine you’re working on a group project with three teams of people.

Team #1 - Input Layer

The first team gathers basic information – they are like the input layer taking in raw data.

Team #2 - Hidden Layer

The second team takes that information and analyzes or processes it – they are like the hidden layer, doing intermediate work that isn’t directly visible externally.

Team #3 - Output Layer

The final team uses the processed information to make a decision or produce an answer – they act as the output layer.

Each person in this analogy is like a neuron, and the way the teams pass information along resembles the layered structure of a neural network. The combined efforts of all team members yield the final result, just as the combined activations of all neurons produce the network’s output. Some team members (neurons) might have more influence on the outcome than others – this is analogous to certain connections having higher weights, meaning those signals are considered more important by the network.

The key idea is that by organizing neurons into layers, each layer can learn a different level of abstraction: the early layer might pick up simple patterns (like edges in an image or basic word features in a sentence), while later layers combine those into more complex concepts (like recognizing a face or understanding the intent of a sentence).

Activation Functions: Bringing Neurons to Life

🔍 What Is an Activation Function?

After a neuron sums up its weighted inputs, it applies an activation function to determine its output. This function is a mathematical rule—often non-linear—that decides whether the neuron should “fire” and how strongly.

🧬 Biological Inspiration

In biological neurons, an electrical impulse is triggered only if the combined input signal exceeds a certain threshold. Artificial neurons simulate this behavior using activation functions, introducing either a threshold or non-linearity into the output.

🎚️ Squashing Inputs into Useful Ranges

Put simply, an activation function squashes the raw summed input into a more useful output range.

For example, the Sigmoid function outputs values between 0 and 1.

The ReLU function (Rectified Linear Unit) outputs 0 for negative values and passes through positive values unaltered.

Common Activation Functions

Sigmoid: Smooth curve outputting between 0 and 1. Commonly used in the output layer for binary classification.

Tanh: Outputs values from -1 to 1. Zero-centered and often used in hidden layers.

ReLU: Outputs 0 for negatives, and raw value for positives. Fast, efficient, and widely used in modern architectures.

Applying an activation function is critical because it enables the network to learn and represent complex, non-linear relationships in data.
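Here is a small sketch of the three functions using only Python's standard `math` module. It is meant for building intuition, not as a numerically hardened implementation (for instance, this `sigmoid` can overflow for very large negative inputs).

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Squash any real number into the range (-1, 1), centered on zero."""
    return math.tanh(z)


def relu(z):
    """Return 0 for negative inputs and the input itself otherwise."""
    return max(0.0, z)


# Compare how each function treats a negative, a zero, and a positive input.
for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):+.3f}  relu={relu(z):.1f}")
```

Running it shows the "squashing" directly: sigmoid and tanh flatten out toward their limits, while ReLU cuts off negatives and leaves positives alone.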

🚫 Why Linear Models Fall Short

Without activation functions, even deep neural networks would behave like a single linear equation, greatly limiting their expressiveness.

Linear models can only separate data with straight boundaries, which is rarely sufficient for real-world problems.
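You can check the "collapses into a single linear equation" claim yourself. The sketch below stacks two layers with no activation in between and compares them with one combined layer. It uses single numbers instead of full weight matrices to keep the algebra visible, and the weight values are arbitrary assumptions.

```python
def linear(x, w, b):
    """A layer with no activation function: just scale and shift."""
    return w * x + b


# Hypothetical weights for two stacked layers with no activation in between.
w1, b1 = 2.0, 1.0
w2, b2 = 3.0, -4.0

# Algebra: w2 * (w1 * x + b1) + b2 == (w2 * w1) * x + (w2 * b1 + b2)
w_combined = w2 * w1
b_combined = w2 * b1 + b2

for x in (-1.0, 0.0, 2.5):
    stacked = linear(linear(x, w1, b1), w2, b2)
    single = linear(x, w_combined, b_combined)
    print(x, stacked, single)  # the last two columns match
```

However many purely linear layers you stack, the same trick folds them into one. The extra depth buys nothing until a non-linear activation sits between the layers.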

🍎 A Simple Analogy: Apples vs. Bananas

Imagine classifying apples and bananas using color and shape. A purely linear model might draw a straight line that fails when there's overlap—like a green apple resembling a banana.

With non-linear activation functions, the network can draw complex boundaries (curves, or combinations of several regions) that separate overlapping data more effectively.
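A classic small case of this is the XOR pattern, where the answer is 1 when exactly one of two inputs is on. No single straight line separates those cases, but a tiny network with ReLU activations handles it. XOR stands in for the fruit example here, and the weights are hand-picked for illustration rather than learned.

```python
def relu(z):
    return max(0.0, z)


def tiny_network(x1, x2):
    """Two hidden ReLU neurons feeding one linear output neuron."""
    h1 = relu(x1 + x2)        # active when at least one input is on
    h2 = relu(x1 + x2 - 1.0)  # active only when both inputs are on
    return h1 - 2.0 * h2      # cancel out the "both on" case


for x1, x2 in [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]:
    print((x1, x2), tiny_network(x1, x2))
```

The network outputs 1 only for the two mixed cases. Remove the `relu` calls and the whole thing becomes a straight-line model again, which cannot produce that pattern.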

Training Neural Networks: Learning by Example

🤔 How Do Neural Networks Learn?

Neural networks learn through a cyclical process of making predictions, measuring errors, and adjusting themselves to improve over time. Much like how humans learn from trial and error, a neural network gets better by being exposed to many examples and learning from its mistakes.

🔄 Step 1: Forward Pass – Making a Prediction

The learning process begins with a forward pass:

An input (e.g., an image of a cat) is fed into the network.

The input flows layer by layer, getting transformed at each stage.

The network produces an output—for instance, “90% confidence this is a cat.”

This is the network’s best guess based on its current internal settings (weights).
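Where does a figure like "90% confidence" come from? One common approach is the softmax function, which turns raw output scores into positive values that sum to 1. This post doesn't say which function a given network uses, so treat softmax, the labels, and the scores below as assumptions for illustration.

```python
import math


def softmax(scores):
    """Turn raw output scores into positive values that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores from an output layer for three classes.
labels = ["cat", "dog", "rabbit"]
scores = [3.2, 0.9, 0.3]

confidences = softmax(scores)
best = max(range(len(labels)), key=lambda i: confidences[i])
print(labels[best], round(confidences[best], 2))
```

The highest raw score always gets the highest confidence. Softmax just rescales the scores so they can be read like percentages.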

📉 Step 2: Calculate the Error – Measuring the Miss

Next, we compare the prediction to the correct answer:

If the true label is “cat,” but the network predicted “dog” or gave only 60% confidence, that’s an error.

The difference between the predicted output and the actual target is calculated using a loss function.

This error quantifies how far off the network is and becomes the basis for learning.
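Two widely used loss functions are squared error and binary cross-entropy. The sketch below scores a few predictions against a true label of "cat" (encoded as 1.0). Picking these two losses and these confidence values is an assumption for the example; real projects choose a loss to suit the task.

```python
import math


def squared_error(predicted, target):
    """Squared difference: 0 when the prediction is exactly right."""
    return (predicted - target) ** 2


def binary_cross_entropy(predicted, target):
    """Penalize confident wrong answers heavily; `predicted` must be in (0, 1)."""
    return -(target * math.log(predicted) + (1 - target) * math.log(1 - predicted))


target = 1.0  # true label: "cat"
for confidence in (0.9, 0.6, 0.1):
    print(
        confidence,
        round(squared_error(confidence, target), 3),
        round(binary_cross_entropy(confidence, target), 3),
    )
```

Both numbers grow as the confidence in the correct answer drops. Cross-entropy grows much faster when the network is confidently wrong.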

🔁 Step 3: Backward Pass – Adjusting the Weights

Now comes the critical step: learning from the mistake.

The network uses a process called backpropagation to trace the error back through the layers.

Each neuron receives feedback on how much it contributed to the error.

The network slightly adjusts the weights of its connections to reduce the error next time.

Think of it as tweaking internal dials to improve future predictions. These adjustments are typically small and happen gradually across many examples.
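Here is what one of those "dial tweaks" looks like for a single sigmoid neuron, as a rough sketch. Full backpropagation applies the same chain rule across many layers; this example covers just one neuron, and the input, starting weights, and learning rate are made-up values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, w, b):
    return sigmoid(w * x + b)


def loss(x, target, w, b):
    return (predict(x, w, b) - target) ** 2


def gradients(x, target, w, b):
    """Chain rule: d(loss)/dw = d(loss)/d(pred) * d(pred)/dz * dz/dw."""
    pred = predict(x, w, b)
    dloss_dpred = 2.0 * (pred - target)
    dpred_dz = pred * (1.0 - pred)  # derivative of the sigmoid
    dloss_dz = dloss_dpred * dpred_dz
    return dloss_dz * x, dloss_dz   # (gradient for w, gradient for b)


x, target = 1.5, 1.0
w, b = 0.2, 0.0
learning_rate = 0.5

before = loss(x, target, w, b)
grad_w, grad_b = gradients(x, target, w, b)
w -= learning_rate * grad_w  # step each parameter against its gradient
b -= learning_rate * grad_b
after = loss(x, target, w, b)
print(before, after)  # the loss is smaller after the update
```

The gradient tells each parameter which direction increases the error, so stepping the opposite way reduces it. The learning rate controls how big each step is.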

🔂 Step 4: Repeat – Learn Through Iteration

This process is repeated thousands (or even millions) of times:

The network sees a new example.

It predicts, calculates error, updates weights.

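Each predict, measure, and update cycle is one training iteration (or step). A full pass over the entire training dataset is called an epoch, and with each epoch the network refines its understanding.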

Over time, this repetition builds proficiency. The model improves from near-random guessing to reliable predictions.
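Putting the four steps together gives a complete, if tiny, training loop. This sketch trains one sigmoid neuron to reproduce the logical OR of two inputs. The dataset, the zero starting weights, the learning rate, and the number of epochs are all assumptions picked to keep the example small.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(inputs, weights, bias):
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)


def average_loss(examples, weights, bias):
    """Mean squared error over the whole dataset."""
    return sum((predict(x, weights, bias) - t) ** 2 for x, t in examples) / len(examples)


def train(examples, epochs=1000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):              # one epoch = one pass over all examples
        for inputs, target in examples:  # one iteration = one example, one update
            pred = predict(inputs, weights, bias)
            # Gradient of squared error through the sigmoid (chain rule).
            delta = 2.0 * (pred - target) * pred * (1.0 - pred)
            weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias


# Tiny hypothetical dataset: the logical OR of two inputs.
examples = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

loss_at_start = average_loss(examples, [0.0, 0.0], 0.0)
weights, bias = train(examples)
loss_at_end = average_loss(examples, weights, bias)

print(loss_at_start, loss_at_end)  # the loss drops during training
print([round(predict(x, weights, bias)) for x, _ in examples])
```

Nobody told the neuron the rule for OR. It started out guessing 0.5 for everything and found workable weights purely by repeating predict, measure, adjust.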

🚴 A Human Analogy: Learning by Feedback

Training a neural network is much like learning to ride a bike:

At first, you're unsteady and make mistakes.

Each time you tip too far, you adjust.

With practice, you gain balance and control.

Or think of studying for an exam:

You answer practice questions, review mistakes, and adjust your study strategy.

Eventually, your internal “weights” shift toward the correct answers.

Backpropagation plays the role of a tutor—constantly providing correction, so the network gradually improves.

🧠 From Raw Input to Pattern Recognition

As training progresses, the network begins to detect patterns:

Early layers may pick up simple edges or colors.

Mid layers identify textures or shapes.

Final layers recognize whole objects, like a cat’s face.

All of this emerges from adjusting weights—not by hardcoding rules, but by learning from data.
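To get a feel for what "picking up an edge" means, here is a small sketch of the sliding-filter operation used in convolutional layers. The kernel is hand-set purely for illustration. In a trained network those nine numbers would be learned weights, and the image is a made-up toy.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products.

    Like most deep learning libraries, this does not flip the kernel.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# Hypothetical 4x6 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-set kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 3, 3, 0], [0, 3, 3, 0]]
```

The output is zero over the flat regions and large where dark meets bright. An early layer holds many such filters, and later layers combine their responses into textures, shapes, and eventually whole objects.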

⚙️ Power and Practicality

Training neural networks requires:

Large datasets: Often thousands to millions of labeled examples.

Computing power: GPUs or cloud infrastructure to process data efficiently.

Fortunately, modern deep learning frameworks (like TensorFlow and PyTorch) simplify this process—handling the mechanics while you define the structure and data.

Once trained, neural networks can generalize—meaning they make good predictions even on new, unseen data.

Real-World Applications: Neural Networks in Action

Neural networks may sound abstract until you realize you likely use products powered by them every day. Their remarkable ability to recognize patterns and make intelligent decisions has led to widespread adoption across industries: from healthcare and finance to entertainment and transportation.

👥 Social Media & Friend Recommendations

Ever wondered how Facebook suggests people you might know? That’s neural networks at work:

It analyzes your social graph, mutual friends, interests, and interactions.

By detecting patterns of similarity and connectivity, it predicts potential connections.

The same logic applies to content recommendations: posts, videos, and ads you see are personalized based on your past engagement.

These models effectively learn your preferences and continuously refine what they show you.

🛍️ Personalized Shopping & Streaming Suggestions

Neural networks fuel recommendation engines used by platforms like Amazon and Netflix:

They learn from millions of users’ behavior—what people browse, buy, watch, or skip.

If users similar to you enjoyed a certain movie or product, the network recommends it to you.

This creates a personalized experience, fine-tuned over time.

The more you interact, the smarter the system becomes, increasing both satisfaction and sales.

🏥 Healthcare & Early Diagnosis

In medicine, neural networks are transforming how doctors interpret data:

They analyze medical images (e.g., X-rays, MRIs, tissue scans) with precision.

In fields like oncology, some studies report networks detecting cancerous cells with accuracy comparable to human experts.

They also assist in early disease detection, spotting subtle patterns invisible to the naked eye.

This enhances diagnostic speed and accuracy, offering potential for earlier treatment and better outcomes.

🎙️ Voice Assistants & Language Understanding

When you say, “Hey Siri” or “Alexa, play jazz,” neural networks are hard at work:

They convert your speech to text, then interpret meaning using language models.

These systems are trained on vast datasets of audio and language, enabling natural and accurate responses.

Services like Google Translate also use neural networks to translate text between languages, understanding linguistic patterns across cultures.

These models are regularly retrained on new data, improving fluency and responsiveness over time.

🚗 Autonomous Driving & Smart Vision

Neural networks play a central role in self-driving vehicles and computer vision:

Convolutional Neural Networks (CNNs) interpret real-time video feeds to identify road signs, lane markings, pedestrians, and obstacles.

Based on this input, the car makes split-second decisions: when to turn, stop, accelerate, or yield.

Driver-assistance features like Tesla Autopilot rely on such models to help navigate complex environments.

Even your smartphone camera uses neural networks to enhance photos, apply filters, and enable features like facial recognition or portrait mode.

🧠 Beyond the Obvious: Other Everyday Uses

Neural networks are also used in:

Fraud Detection: Spotting unusual patterns in banking transactions.

Education: Customizing learning paths based on student performance.

Creative Tools: Generating AI-powered art, music, and design elements.

Customer Support: Powering chatbots and virtual assistants.

They’re quietly embedded in systems that augment decision-making, optimize workflows, and enhance personalization across industries.

💡 The Takeaway

Neural networks have moved from research labs to the real world, becoming a core engine of intelligent systems.

By recognizing complex patterns in massive data sets, they enable machines to perform tasks once thought to require human intuition.

They’re not just theoretical models; they’re the digital infrastructure of modern AI, shaping the way we live, work, shop, and interact.

Final Thoughts

Neural networks might have seemed like a complex or mysterious topic at first, but as we’ve seen, the core ideas can be understood with some simple analogies and explanations. They are essentially digital brains that learn from examples – adjusting their “knowledge” (weights) gradually to improve at whatever task they’re given. From their humble beginnings in the 1950s to the deep learning revolution of today, neural networks have proven to be incredibly powerful and versatile. They’re a big part of why we’ve seen such rapid progress in AI in recent years.

😊 Have a great day!

Content was researched with assistance from advanced AI tools for data analysis and insight gathering.