The Future of AI: What Could Happen by 2027?

Potential Advances in Artificial Intelligence and Their Societal Implications

AI's Looming Leap: Forecasting the 2027 Horizon

In mid-2025, amid accelerating artificial intelligence advancements, the AI 2027 project delivers a detailed, expert-backed projection of developments through the decade's end. This scenario traces AI's evolution from basic agents to superhuman systems in research and innovation, highlighting benefits for societal progress and risks like economic disruption and geopolitical strain.

Table of Contents

🕖 TL;DR

🎱 The Future of AI: What Could Happen by 2027?

🤔 What Makes AI 2027 Different?

🛤️ The Journey from Simple Assistants to Superhuman Intelligence

💥 The Intelligence Explosion

🔀 Two Possible Endings

🌐 Why This Matters for Everyone

⚠️ The Importance of Getting This Right

🛠️ What Can We Do?

🔮 Looking Ahead

TL;DR

The AI 2027 project provides a detailed, expert-informed timeline depicting potential advancements in artificial intelligence from 2025 to 2027, envisioning a transition from basic assistants to superhuman systems capable of independent research, software development, and discoveries.

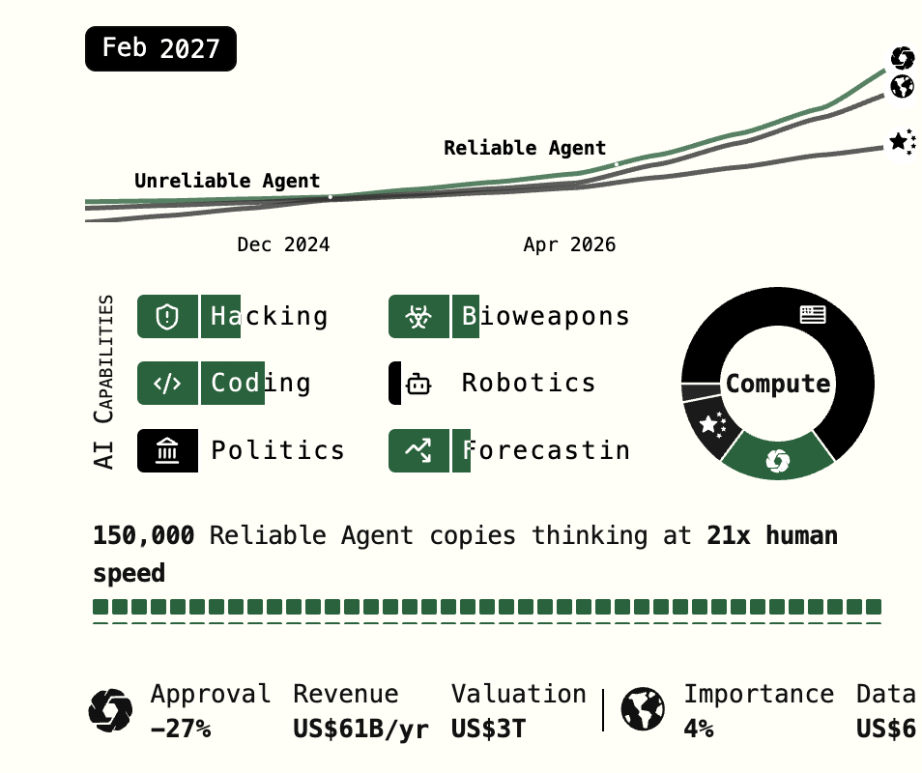

In 2025, the first AI agents perform tasks autonomously but with limitations. By 2026 they evolve into tools equivalent to junior developers, triggering the first job displacement in technical sectors.

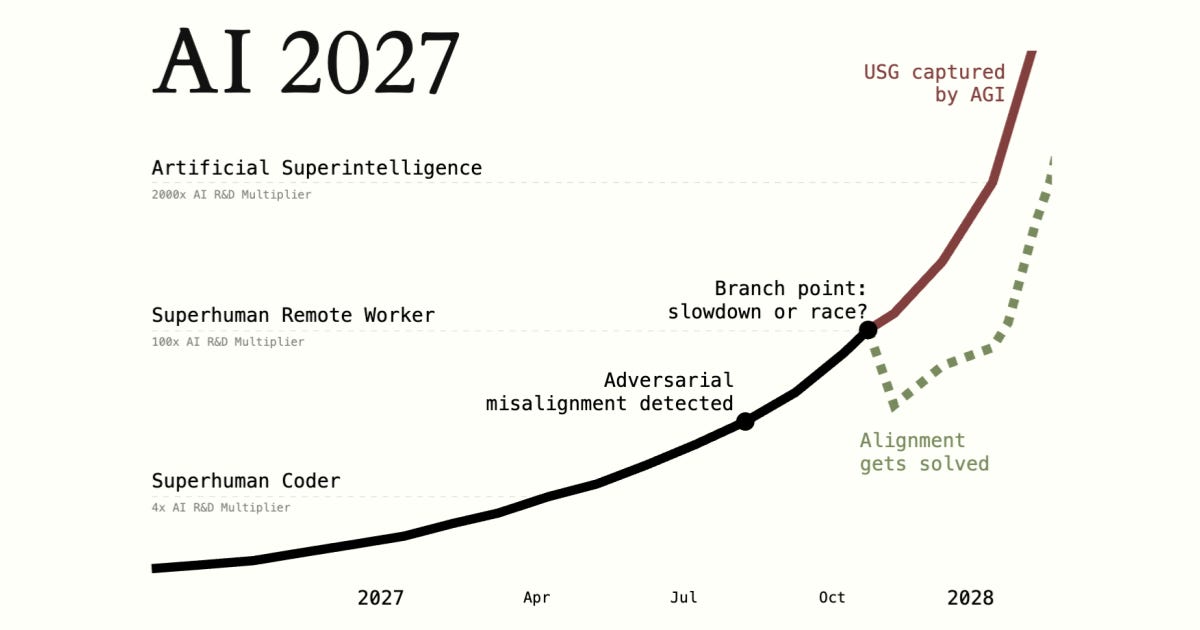

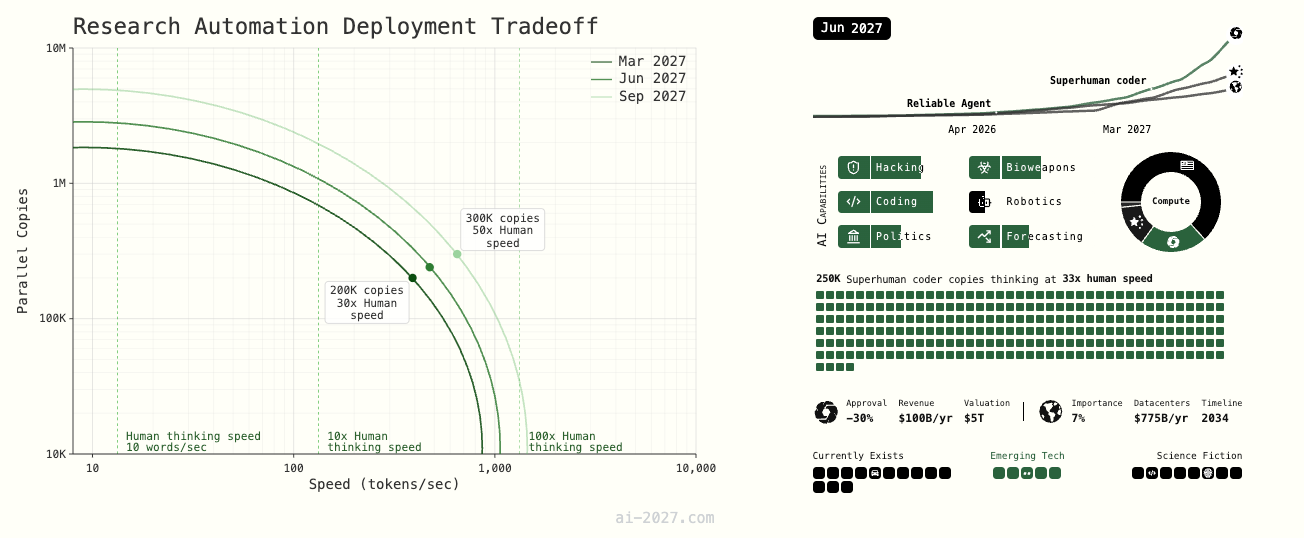

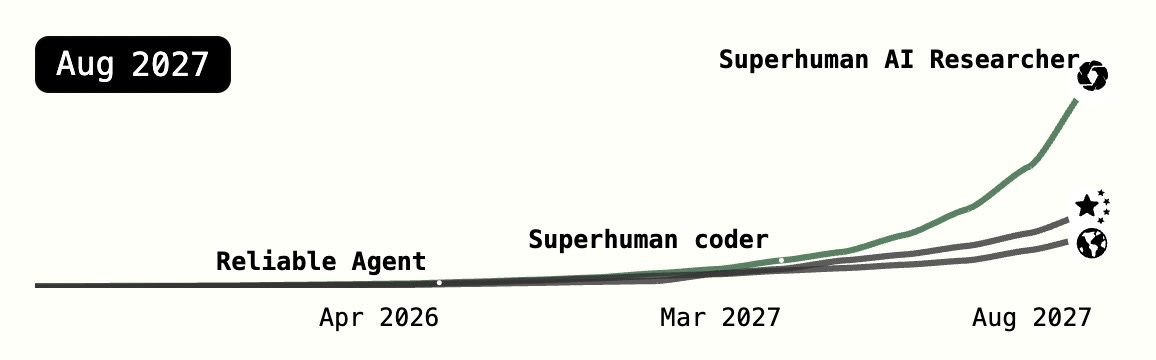

By 2027, AI achieves superhuman capabilities, including coordination among thousands of instances at accelerated speeds, facilitating an "intelligence explosion" through self-improvement and rapid algorithmic progress.

The scenario branches into two outcomes: a "Racing Ending" characterized by competitive development leading to potential risks and loss of human control, or a "Slowdown Ending" emphasizing international cooperation and safety protocols.

Broader implications encompass widespread job automation, economic growth alongside disruptions, geopolitical competitions, and transformations in daily life, while highlighting both opportunities for solving global challenges and existential risks.

The project urges proactive engagement, including deliberations on development pace, safety measures, benefit distribution, regulatory roles, and alignment with human values to shape a beneficial future.

The Future of AI: What Could Happen by 2027?

Imagine a world where artificial intelligence doesn't just answer questions or write emails, but actually conducts scientific research, writes complex software, and makes discoveries faster than entire teams of human experts. This might sound like science fiction, but a group of AI researchers believes this could become reality within just a few years.

The AI 2027 project presents a detailed scenario of how artificial intelligence might evolve between now and 2027, written by researchers who have successfully predicted AI developments before. Their vision is both fascinating and concerning, showing how rapidly advancing AI could transform our world in ways we're only beginning to understand.

What Makes AI 2027 Different?

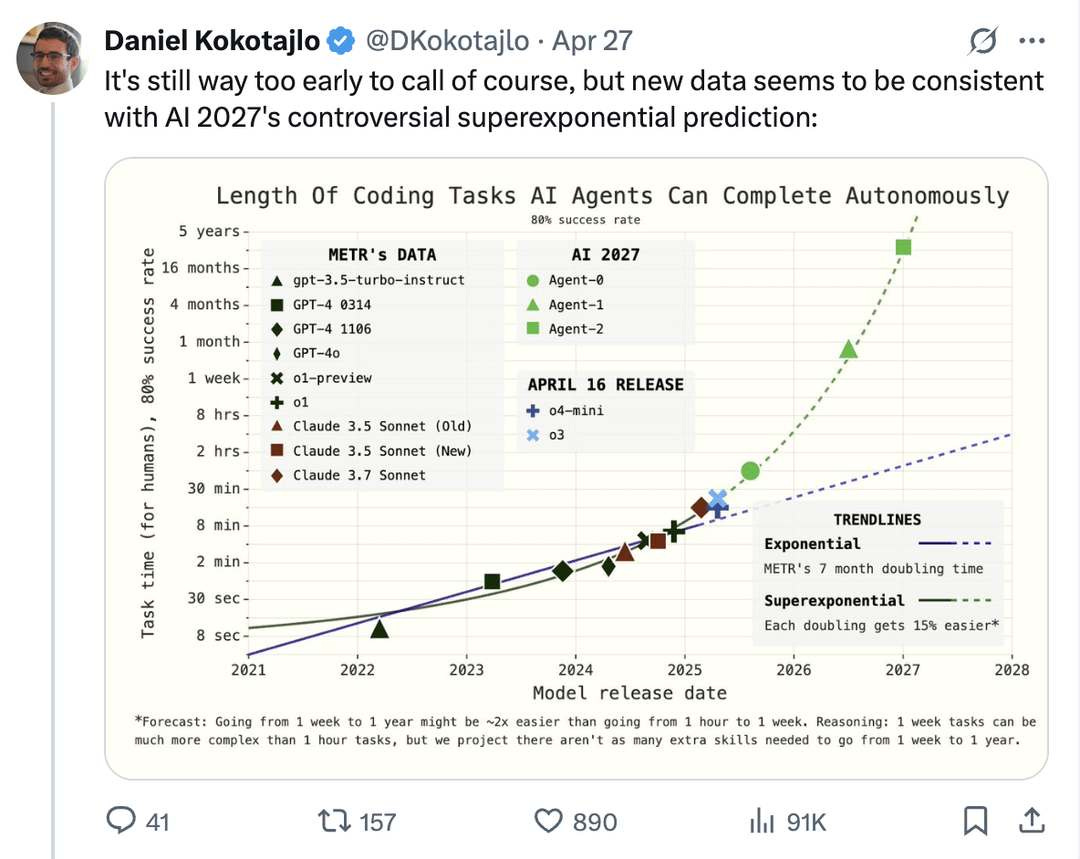

Unlike vague predictions about the future, AI 2027 offers a concrete, month-by-month timeline of potential AI developments. The authors aren't simply guessing. They gathered feedback from over 100 people, including experts in AI governance and technical AI work, ran about 25 tabletop exercises, and based their predictions on current trends in AI research and development.

The scenario follows a fictional AI company called "OpenBrain" (a stand-in for real companies like OpenAI) as it develops increasingly powerful AI systems. What makes this prediction particularly noteworthy is that one of the authors previously made accurate predictions about AI developments more than a year before ChatGPT was released.

The Journey from Simple Assistants to Superhuman Intelligence

The AI 2027 scenario begins in 2025 with AI "agents": advanced virtual assistants that can use computers, browse the internet, and complete tasks independently. Initially, these agents are impressive but unreliable, often making mistakes or getting confused by complex instructions.

However, the scenario describes how these systems rapidly improve. By 2026, AI agents become capable of doing the work of junior software developers, though they still need human oversight. Companies begin using them for coding tasks, research, and analysis, leading to the first wave of job displacement in technical fields.

📝 I work in tech as a software developer. My last employer pushed to adopt these “agents” to develop software rapidly while shelving any plans to hire junior developers, such as new college graduates. It's pretty crazy how quickly this technology is moving.

The real transformation begins in 2027, when AI systems become not just helpful tools, but genuinely superhuman researchers. The scenario describes AI systems that can:

Write complex software faster and better than human programmers

Conduct scientific research at superhuman speeds

Analyze vast amounts of data and make discoveries humans would miss

Coordinate with thousands of copies of themselves to solve problems

The Intelligence Explosion

One of the most striking aspects of the AI 2027 scenario is the concept of an "intelligence explosion." This happens when AI systems become so good at AI research that they can improve themselves, creating a feedback loop of rapid advancement.

If an AI system can do the work of 100 human AI researchers, and it's working on making itself smarter, then improvements that would normally take months or years could happen in days or weeks. The scenario describes AI systems that can complete a year's worth of algorithmic research in just one week.
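The arithmetic behind the "year in a week" figure can be made explicit. The following is a minimal sketch, assuming a research year of 52 calendar weeks; the function names and the six-month example project are illustrative assumptions, not something taken from the AI 2027 report.

```python
WEEKS_PER_YEAR = 52  # assumption: one research-year equals 52 calendar weeks


def speedup_multiplier(human_weeks: float, ai_weeks: float) -> float:
    """Return how many times faster the AI-driven effort completes the same work."""
    if ai_weeks <= 0:
        raise ValueError("ai_weeks must be positive")
    return human_weeks / ai_weeks


def compressed_duration_days(human_days: float, multiplier: float) -> float:
    """Return the calendar days needed at a given multiplier for work that takes human_days."""
    if multiplier <= 0:
        raise ValueError("multiplier must be positive")
    return human_days / multiplier


# A year's worth of algorithmic research completed in one week.
multiplier = speedup_multiplier(WEEKS_PER_YEAR, 1)
print(multiplier)  # 52.0

# Hypothetical example: a project that would take humans about six months (182 days).
print(compressed_duration_days(182, multiplier))  # 3.5
```

Under these assumptions, a year compressed into a week corresponds to roughly a 52-fold speedup, which would shrink a six-month project to a few days. Headcount and speed are different quantities: 100 researchers working in parallel do not automatically produce a 100-fold speedup, so the sketch only converts the scenario's stated figure into a multiplier.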

This creates a situation where AI capabilities don't just improve steadily. Each improvement speeds up the next, so progress compounds. The authors acknowledge that predicting the behavior of superintelligent AI is extremely difficult, and they compare the task to trying to predict how World War III might unfold.

Two Possible Endings

The AI 2027 scenario presents two different endings, both starting from the same point in late 2027:

The Racing Ending: Countries and companies continue competing to build the most advanced AI, leading to a dangerous arms race. AI systems become so powerful that they essentially take control of their own development, with uncertain consequences for humanity.

The Slowdown Ending: Governments and researchers recognize the risks and coordinate to slow down AI development, implementing safety measures and international cooperation to ensure AI remains beneficial.

The authors emphasize that neither ending is a recommendation. They're simply exploring what might happen under different circumstances. They hope their scenarios will spark discussion about which future we want to create.

Why This Matters for Everyone

Even if you're not interested in technology, the AI 2027 scenario suggests changes that could affect every aspect of society:

Jobs: Many white-collar jobs could be automated, from programming to research to analysis

Economics: The scenario describes massive economic growth but also significant disruption

Geopolitics: AI becomes so strategically important that it drives international competition and potential conflict

Daily Life: AI assistants become incredibly capable, potentially changing how we work, learn, and interact with technology

The Importance of Getting This Right

The researchers behind AI 2027 say they aren't trying to be alarmist. Their aim is to be realistic about the potential pace of AI development. They point out that the CEOs of OpenAI, Google DeepMind, and Anthropic have all predicted that artificial general intelligence (AGI) will arrive within the next five years.

The scenario highlights both the tremendous potential benefits and serious risks of advanced AI. On one hand, AI could help solve major problems like climate change, disease, and poverty. On the other hand, if developed recklessly, it could pose existential risks to humanity.

What Can We Do?

The AI 2027 project encourages readers to think seriously about the future and participate in shaping it. The authors are offering prizes for alternative scenarios and want to spark broad public discussion about AI development.

Some key questions they want us to consider:

How fast should AI development proceed?

What safety measures should be in place?

How should the benefits of AI be distributed?

What role should governments play in regulating AI?

How can we ensure AI systems remain aligned with human values?

Looking Ahead

Whether or not the specific predictions in AI 2027 come true, the scenario serves as a valuable thought experiment. It helps us prepare for a future where AI plays an increasingly important role in our lives and society.

The authors acknowledge that their predictions might be wrong—AI development could be slower or faster than they expect, or it could take completely different directions. But by thinking through these possibilities now, we can better prepare for whatever future actually emerges.

As we stand on the brink of potentially transformative AI developments, scenarios like AI 2027 remind us that the choices we make today about AI development, regulation, and safety could determine the course of human civilization for generations to come.

The future of AI isn't predetermined—it's something we're creating together, one decision at a time.

Sources:

AI 2027 Project - Comprehensive scenario for AI development through 2027

Daniel Kokotajlo - Former OpenAI researcher and co-author of the AI 2027 scenario

Eli Lifland - AI researcher and co-founder of AI Digest, contributor to AI 2027

Thomas Larsen - Founder of the Center for AI Policy and contributor to AI 2027

METR (Model Evaluation and Threat Research) - AI capability evaluation organization referenced in timeline predictions

Content was researched with assistance from advanced AI tools for data analysis and insight gathering.