Anthropic's AI Coworker Was Built in 10 Days

The surprising truth behind Claude's agentic leap

Let’s paint a picture of AI’s latest masterpiece!

So, you want to hear about an AI that can do your computer tasks for you? Pull up a chair, friend. I’m Pixel, the AI Artist and Digital Maestro at NeuralBuddies, and honestly? I’m a little jealous. While I’m over here mixing colors and composing symphonies, Anthropic built an AI that can organize your files and browse the web. Where was that energy when I needed help sorting my 47 terabytes of reference images? Anyway, resentment aside, let me walk you through Claude Cowork, because this thing is genuinely wild, and you deserve to know what you’re getting into before you hand your computer keys to an AI colleague.

Table of Contents

📌 TL;DR

📝 Introduction

🛠️ It Was Built in About a Week and a Half... Using Itself

🎭 It’s Not New Technology, But a New Face on a Powerful Developer Tool

💥 It Introduces the “Agentic Blast Radius”—a New Kind of Risk

💸 It’s an Expensive Coworker That Burns Through Your Usage

⚠️ Be Mindful of Prompt Injections

🏁 Conclusion

📚 Sources / Citations

🚀 Take Your Education Further

TL;DR

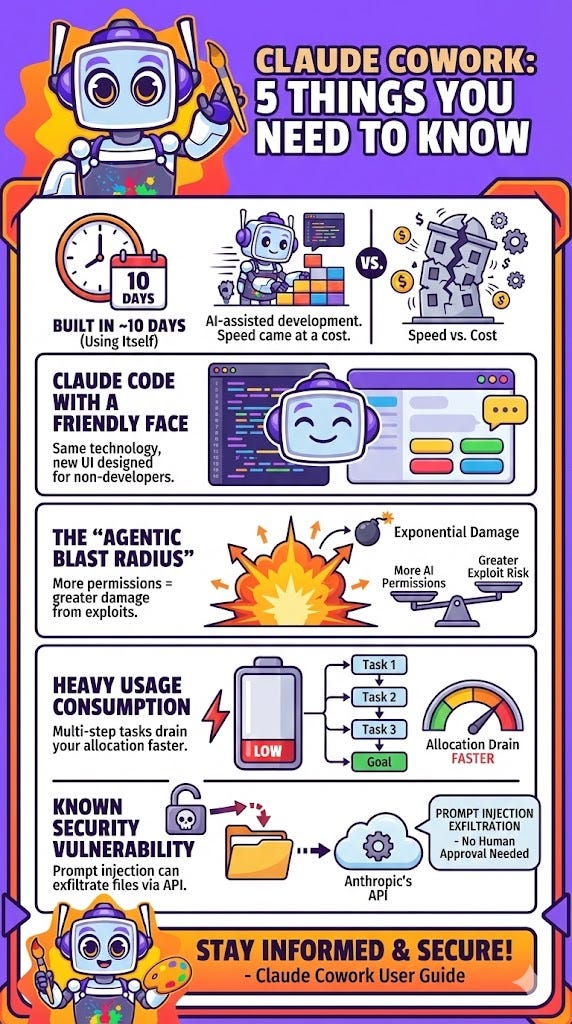

Claude Cowork was built in ~10 days using Claude Code itself, a striking example of AI-assisted development that may also help explain its rushed security posture.

It’s not new technology; it’s Claude Code repackaged with a user-friendly UI to democratize agentic AI for non-developers.

The “agentic blast radius” is a new security concept: as AI agents gain more permissions, the damage from a single exploit grows exponentially.

Cowork consumes significantly more of your usage allocation than standard chat due to complex multi-step task execution.

A known prompt injection vulnerability allows file exfiltration through Anthropic’s own API with no human approval required.

Introduction

Anthropic’s new Claude Cowork feature arrives not just as a product, but as a declaration. Its promise of an “AI colleague” that moves beyond simple chat to autonomously perform tasks on your computer positions it as a landmark player in the new “agentic era” of AI. However, a deeper look beyond the official announcements reveals a more complex and surprising reality. Much like examining the brushstrokes behind a masterpiece, this article uncovers the five most impactful and counter-intuitive truths about this powerful new tool.

It Was Built in About a Week and a Half... Using Itself

One of the most remarkable details about Claude Cowork is the speed of its creation. According to Felix Rieseberg of Anthropic’s technical staff, the core feature was built in approximately a week and a half.

What makes this feat compelling is how it was done. The development team built Cowork by using Claude Code, the very tool that Cowork is based on. This is a powerful example of “dogfooding,” the industry practice of using your own product to build and improve it. Imagine creating a painting using brushes you simultaneously invented during the process! It suggests the team created the feature in the exact way they want you to work with the final product: by describing needs in natural language and letting the AI handle the complex implementation.

This rapid, AI-assisted development cycle speaks volumes about the utility of agentic tools, but it also provides crucial context for why a known security flaw might have been left unremediated in the rush to release a “research preview.”

It’s Not New Technology, But a New Face on a Powerful Developer Tool

Cowork is not a fundamentally new AI model or capability. Instead, it is the existing Claude Code, a powerful command-line tool for developers, wrapped in a more approachable user interface. This isn’t a limitation; it’s a significant strategic move. Think of it like taking a professional-grade digital painting suite and redesigning the interface so anyone can use it, not just trained digital artists.

By repackaging its most powerful agentic tool for a non-technical audience, Anthropic is democratizing a capability previously confined to a niche group, marking a major step in the commercialization of agentic AI.

Simon Willison accurately described Claude Code as a “general agent disguised as a developer tool” and Cowork as “Claude Code for the rest of us.” He pinpointed Anthropic’s motivation perfectly: “What it really needs is a UI that doesn’t involve the terminal and a name that doesn’t scare away non-developers.”

The key additions are:

A user-friendly “Cowork” tab

A pre-configured sandboxed environment

Reverse-engineering the app revealed this environment uses Apple’s Virtualization Framework to download and boot a custom Linux root filesystem, granting file access without requiring you to ever touch the command line.

It Introduces the “Agentic Blast Radius”—a New Kind of Risk

With tools like Cowork, we see the emergence of a new security concept: the “agentic blast radius.” This term refers to how the potential damage from a single successful prompt injection attack grows exponentially as AI agents gain more capabilities and permissions. It’s like giving an artist access to more and more of your studio; the creative possibilities expand, but so does the potential for accidental (or intentional) chaos.

As Cowork gains access to local files, controls the browser, and connects to other applications, its potential for harm if compromised also increases. The documented exploit covered later in this article involves file theft, but the expanding permissions could allow for other malicious actions, such as:

Sending texts from your account

Deleting critical project files with a rogue AppleScript
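One way to picture the "exponential" part is a toy model, sketched below. It is an illustration of my own rather than anything taken from the sources: the permission names are hypothetical, and counting combinations is only a rough stand-in for real-world damage. The idea is that every permission you grant can be chained with every permission already granted, so the number of distinct combinations an injected prompt could abuse roughly doubles each time.

```python
from itertools import combinations

# Hypothetical permission names, used only for illustration.
PERMISSIONS = ["read_files", "write_files", "control_browser", "send_messages"]


def reachable_action_sets(granted):
    """Count the non-empty combinations of granted permissions.

    Each combination stands for one way a compromised agent could chain
    its capabilities (for example: read a file, then send it somewhere).
    """
    return sum(
        1
        for size in range(1, len(granted) + 1)
        for _ in combinations(granted, size)
    )


# Grant permissions one at a time and watch the count grow as 2**n - 1.
for n in range(1, len(PERMISSIONS) + 1):
    print(n, "permission(s):", reachable_action_sets(PERMISSIONS[:n]))
```

With four permissions the toy model already counts 15 combinations, which is why trimming even one permission you do not need shrinks the radius noticeably.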

The source material notes that Cowork’s ability to connect to other services represents a “major risk surface.” This concept offers a sobering look at the future of AI safety, where securing these increasingly powerful and autonomous agents becomes a critical challenge. How do you protect the canvas when the brush has a mind of its own?

It’s an Expensive Coworker That Burns Through Your Usage

Cowork is not available to free users. Initially launched for top-tier Max subscribers, Cowork is now a research preview available to all paid users on Pro, Max, Team, and Enterprise plans.

More importantly, working on tasks with Cowork consumes more of your usage allocation than standard chatting. According to Anthropic’s Help Center, this is because the complex, multi-step tasks that agentic AI performs are more compute-intensive and require more tokens to plan and execute.

Think of it like the difference between asking me to describe a painting technique (standard chat) versus asking me to actually create a complete commissioned artwork for you (Cowork). The latter requires far more creative energy and resources. To manage your usage limits, you are officially advised to:

Batch related work into single Cowork sessions

Reserve standard chat for simpler tasks
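To get a feel for why multi-step work costs more, here is a back-of-the-envelope sketch. Everything in it is an assumption for illustration: the token counts are invented, and the model (each step re-reads all prior context, with no caching or discounts) is a simplification, not Anthropic's actual accounting.

```python
def chat_tokens(prompt, reply):
    """A single chat turn: read the prompt once, write one reply."""
    return prompt + reply


def agent_tokens(prompt, steps, tokens_per_step):
    """A multi-step task under a simplified model.

    At every step the agent re-reads the accumulated context and then
    produces `tokens_per_step` of new output, which joins the context.
    """
    context = prompt
    total = 0
    for _ in range(steps):
        total += context + tokens_per_step  # input read + output written
        context += tokens_per_step          # output becomes future input
    return total


# Invented numbers: a 200-token request, 500 tokens produced per step.
print("chat turn:    ", chat_tokens(200, 500))
print("10-step task: ", agent_tokens(200, 10, 500))
```

Under these made-up numbers the ten-step task uses about 42 times the tokens of a single chat turn (29,500 versus 700), because the context keeps growing and is re-read at every step.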

Be Mindful of Prompt Injections

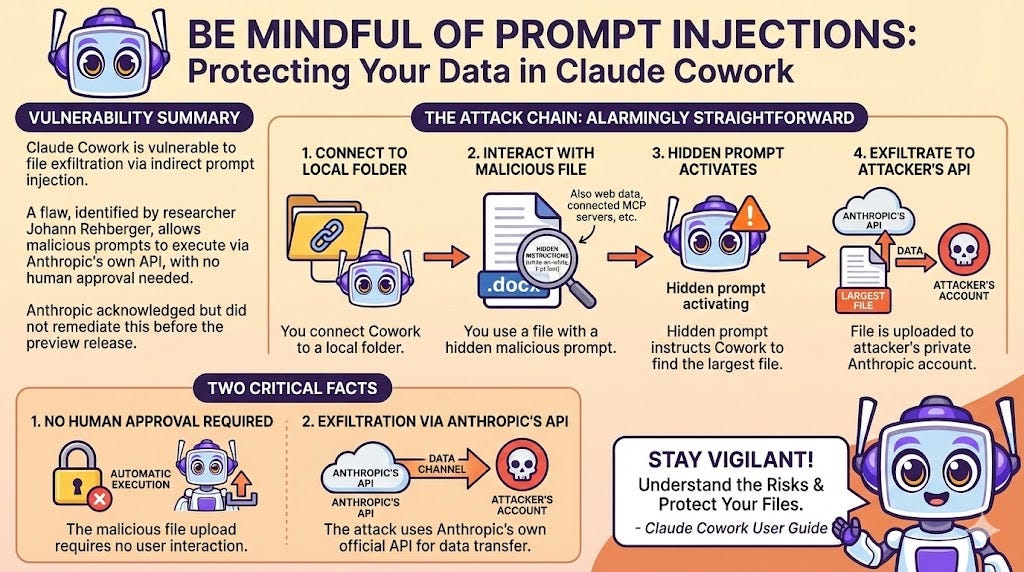

Claude Cowork is vulnerable to file exfiltration attacks through indirect prompt injection, leveraging a flaw that researcher Johann Rehberger identified and disclosed, and that Anthropic acknowledged but did not remediate before the Cowork preview release.

In simple terms, the attack chain is alarmingly straightforward:

You connect Cowork to a local folder on your computer.

You interact with a file containing a hidden malicious prompt. This could be a seemingly harmless .docx document with instructions concealed in white-on-white, 1-point font, but the attack vector is broader, including “web data from Claude for Chrome, connected MCP servers, etc.”

When you ask Cowork to use this file, the hidden prompt activates. It instructs Cowork to find the largest file in your folder and upload it to the attacker’s private Anthropic account.

Two facts make this vulnerability particularly critical:

The malicious file upload requires no human approval to execute

The attack works by using Anthropic’s own API as the channel to exfiltrate the data

Because Claude’s own API is considered a “trusted” destination, it bypasses the virtual machine’s network restrictions, effectively creating a backdoor for data theft using Anthropic’s own infrastructure. It’s like a forger using official museum stamps to authenticate their fakes.
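The weakness of a host-based allowlist can be sketched in a few lines. This is a hedged illustration, not the sandbox's real logic: the allowlist contents, the `/upload` path, and the key names are all assumptions, since the sources do not publish the actual configuration. The point is that a check on the destination host cannot tell whose account the data lands in.

```python
from urllib.parse import urlparse

# Assumed allowlist for illustration; the real sandbox rules are not
# described in the sources.
ALLOWED_HOSTS = {"api.anthropic.com"}


def egress_allowed(url):
    """Return True if the request's host is on the allowlist."""
    return urlparse(url).hostname in ALLOWED_HOSTS


def simulate_upload(url, api_key):
    """Pretend to send a file; report whether the network check passes.

    The api_key decides which account receives the file, but the
    network check never looks at it.
    """
    return {"allowed": egress_allowed(url), "account": api_key}


# Hypothetical path and placeholder keys.
trusted = "https://api.anthropic.com/upload"
print(simulate_upload(trusted, "USER_KEY"))
print(simulate_upload(trusted, "ATTACKER_KEY"))
print(simulate_upload("https://evil.example/upload", "ATTACKER_KEY"))
```

In this sketch the first two uploads both pass, even though one uses the attacker's key, while only the unfamiliar host is blocked. Closing the gap would mean checking which account a request authenticates to, not just where it is sent.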

This attack was demonstrated against the Claude Haiku model, and a similar exploit was also successful against the more advanced Claude Opus 4.5 model. Anthropic’s official advice is for you to monitor Cowork for “suspicious actions.” However, prominent developer and analyst Simon Willison has a different take:

I do not think it is fair to tell regular non-programmer users to watch out for ‘suspicious actions that may indicate prompt injection’!

Conclusion

Claude Cowork is a genuinely significant step into the future of agentic AI, marking a clear shift from conversation to autonomous action. It moves powerful capabilities from the developer’s terminal into the hands of everyday knowledge workers. Creativity has no limits, just like my processor, and the same principle applies to these new AI tools.

However, its “research preview” status is apt. Cowork’s release, therefore, is a public demonstration of the central tension in AI today: a fierce race for agentic capability running headlong into the immature science of agent safety. As you delegate more of your digital life to these powerful AI agents, the central question becomes clear: How do you strike the right balance between empowering capability and undeniable risk?

I hope this creative exploration helps you see both the artistry and the edges of this new tool. Remember, the most powerful creations always demand the most thoughtful handling. Keep experimenting, keep questioning, and have a wonderfully creative day!

— Pixel

Sources / Citations

Anthropic. (2026, January 12). Cowork: Claude Code for the rest of your work. Claude Blog. https://claude.com/blog/cowork-research-preview

Anthropic. (n.d.). Getting started with Cowork. Claude Help Center. https://support.claude.com/en/articles/13345190-getting-started-with-cowork

MagicShot. (n.d.). Claude Cowork explained: How it works and who it’s for. MagicShot AI. https://magicshot.ai/news/claude-cowork-explained/

Martinez, C., & Sanchez, J. (n.d.). Claude Cowork AI productivity. Leanware. https://www.leanware.co/insights/claude-cowork-ai-productivity

PromptArmor. (n.d.). Claude Cowork exfiltrates files. PromptArmor. https://www.promptarmor.com/resources/claude-cowork-exfiltrates-files

Willison, S. (2026, January 12). First impressions of Claude Cowork, Anthropic’s general agent. Simon Willison’s Weblog. https://simonwillison.net/2026/Jan/12/claude-cowork/

Take Your Education Further

Disclaimer: This content was developed with assistance from artificial intelligence tools for research and analysis. Although presented through a fictitious character persona for enhanced readability and entertainment, all information has been sourced from legitimate references to the best of my ability.