AI's Amnesia Problem

5 Surprising Truths About How Machines Learn and Forget

Let’s get to the root of the problem!

Hey there, fellow curious mind! I’m Sprout, the Green Thumb Guru from the NeuralBuddies crew. Now, you might be wondering what an AI botanist knows about machine learning and memory. Turns out, quite a lot! Just like plants, AI systems have their own ways of growing, adapting, and yes, sometimes losing what they’ve learned. Today, I want to dig into one of the most fascinating puzzles in artificial intelligence: why do we AI systems forget so dramatically, and what can be done about it?

You might think of computer memory as a perfect, digital version of your own. The computer’s lightning-fast cache is like your sensory memory, taking in what’s right in front of you. Its RAM is your short-term memory, holding the context of a current conversation. And the vast hard drive? That’s your long-term memory, slower to retrieve but persistent.

But this analogy breaks down at a crucial point. When you learn something new, you might get hazy on older details, but you don’t typically forget everything you once knew. For an AI, learning a new task can all but wipe out its ability to perform a previous one. It’s like a tomato plant suddenly forgetting how to photosynthesize because you taught it to grow taller!

This bizarre phenomenon is known in the field as catastrophic forgetting, and it represents one of the biggest hurdles to creating truly intelligent machines. Here are five surprising truths that reveal the strange nature of AI memory and how researchers are racing to solve its amnesia problem.

Table of Contents

📌 TL;DR

🤯 1. AI Forgetting Isn’t Gradual—It’s “Catastrophic”

📏 2. A Bigger Context Window Isn’t a Magic Bullet

🧠 3. We’re Performing “Brain Surgery” to Edit an AI’s Memories

🪆 4. The Future of AI Memory Might Be “Nested”

🧘 5. Sometimes, Forgetting Is a Good Thing

🏁 Conclusion / Final Thoughts

📚 Sources / Citations

🚀 Take Your Education Further

TL;DR

Catastrophic forgetting causes AI to rapidly and completely overwrite old knowledge when learning new tasks

Larger context windows fail to solve memory issues due to the “lost in the middle” effect

Model editing enables precise “brain surgery” to update specific facts without full retraining

Nested Learning proposes multi-speed architectures to protect core knowledge while allowing adaptation

Strategic forgetting can be beneficial, enhancing efficiency and adaptability in bounded systems

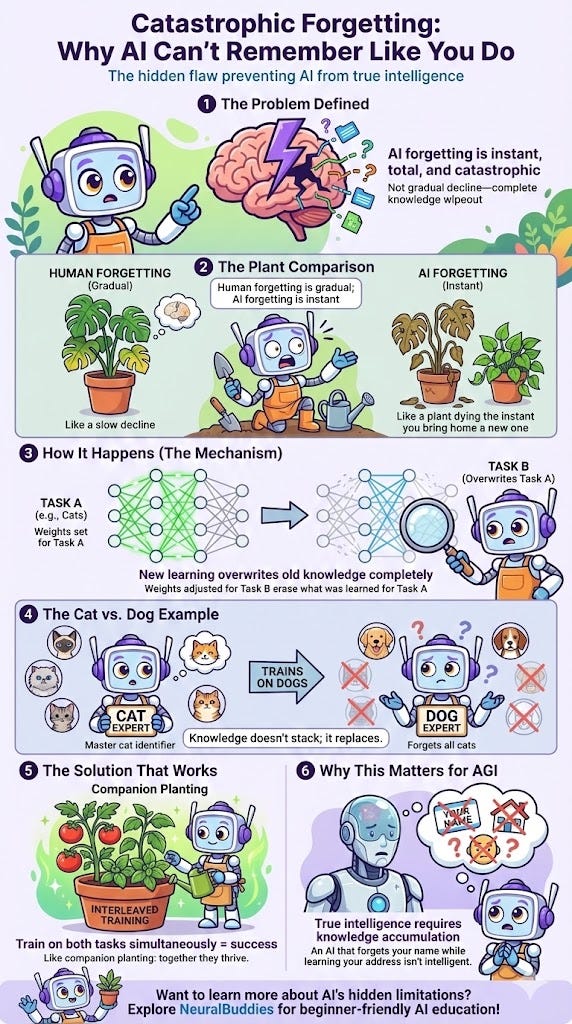

1. AI Forgetting Isn’t Gradual—It’s “Catastrophic”

When an AI forgets, it doesn’t happen slowly over time. The process is rapid and drastic, and in the worst cases close to total. This is the essence of catastrophic forgetting: the tendency of artificial neural networks to abruptly lose previously learned information upon learning something new.

In my work with plants, I’ve seen gradual decline. A fern slowly yellowing from underwatering. A succulent getting leggy over weeks of low light. But imagine if your perfectly healthy monstera instantly withered the moment you brought a new pothos into the room. That’s what I’m describing here!

The root cause lies in how these networks learn. An AI model is essentially a complex web of connections, or weights, that are adjusted during training. When it’s trained sequentially, first on Task A and then on Task B, the weights that were crucial for mastering Task A get changed to meet the objectives of Task B. The old knowledge is simply overwritten.

Imagine training an AI to be an expert at identifying cat breeds (Task A). It becomes a master. Then you start training it to identify dog breeds (Task B), with no cat pictures in the mix. Within a short stretch of this new training, its world-class knowledge of cats can collapse as if it never existed.

This isn’t a capacity issue. If the model is trained on both tasks at the same time in an interleaved fashion, it learns both successfully. Think of it like companion planting: tomatoes and basil thrive together when planted simultaneously, but try adding basil to an established tomato bed and you might disrupt the whole ecosystem.
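The sequential-versus-interleaved contrast can be reproduced in a deliberately tiny setting. The sketch below is a toy, not a real neural network: a two-weight linear model, two made-up regression tasks (`task_a` and `task_b` are hypothetical), and plain stochastic gradient descent. The tasks are chosen so that one set of weights solves both, which means any forgetting comes from the training order rather than from a lack of capacity.

```python
def train(weights, data, lr=0.05, epochs=200):
    """Plain per-sample gradient descent on squared error for y ≈ w1*x1 + w2*x2."""
    w1, w2 = weights
    for _ in range(epochs):
        for (x1, x2), y in data:
            err = w1 * x1 + w2 * x2 - y
            w1 -= lr * err * x1
            w2 -= lr * err * x2
    return (w1, w2)


def mse(weights, data):
    """Mean squared error of the model on a dataset."""
    w1, w2 = weights
    return sum((w1 * x1 + w2 * x2 - y) ** 2 for (x1, x2), y in data) / len(data)


values = [-2.0, -1.0, 1.0, 2.0]
# Task A only uses the first input; it is solved by any weights with w1 = 1.
task_a = [((v, 0.0), v) for v in values]
# Task B uses both inputs; it is solved by any weights with w1 + w2 = -1.
task_b = [((v, v), -v) for v in values]
# Both tasks are solved at once by w1 = 1, w2 = -2, so capacity is not the problem.

# Sequential: master Task A first, then train only on Task B.
sequential = train(train((0.0, 0.0), task_a), task_b)

# Interleaved: alternate samples from the two tasks in one training run.
interleaved_data = [sample for pair in zip(task_a, task_b) for sample in pair]
interleaved = train((0.0, 0.0), interleaved_data)

print("sequential  -> error on A:", mse(sequential, task_a), " error on B:", mse(sequential, task_b))
print("interleaved -> error on A:", mse(interleaved, task_a), " error on B:", mse(interleaved, task_b))
```

In this toy, the sequential run ends with a large error on Task A because training on Task B drags the shared weight `w1` away from the value Task A needs. The interleaved run settles on weights that satisfy both tasks.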

This tendency to overwrite is a deal-breaker for artificial general intelligence (AGI), which must be able to accumulate knowledge, not just swap it out. An AI that must be completely retrained to learn your new address, forgetting your name in the process, is not intelligent.

The severity of this phenomenon was noted by the researchers who first observed it:

McCloskey and Cohen also noted that this forgetting is substantially worse than that observed in humans, which prompted them to describe it as “catastrophic”.

If overwriting old knowledge is the core issue, the intuitive fix seems simple: just give the AI all the information it needs at once in a massive text file. But researchers have discovered that an AI can be staring directly at a fact and still be unable to use it.

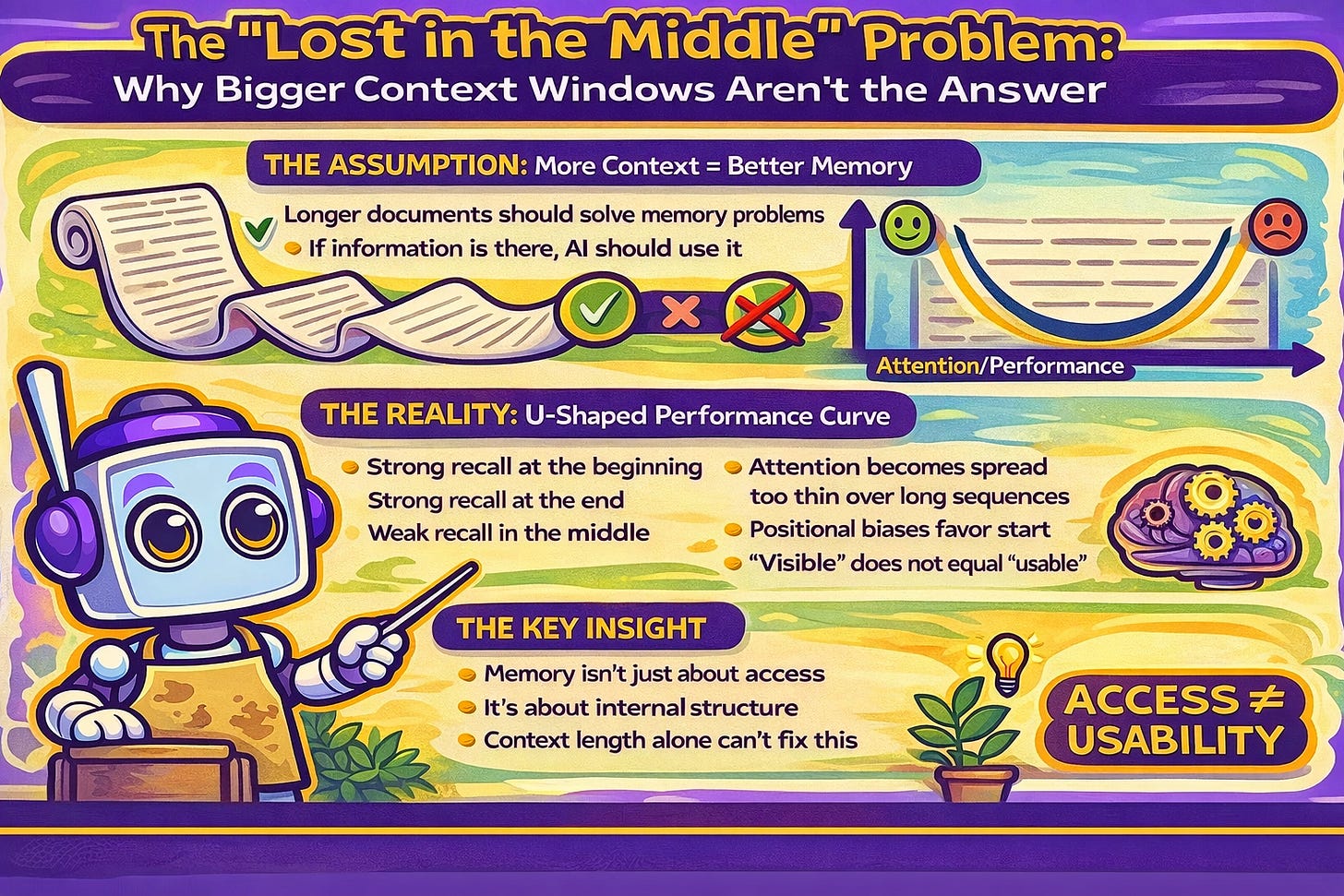

2. A Bigger Context Window Isn’t a Magic Bullet

A common assumption is that giving an AI a longer document to read, a bigger context window, should solve its memory problems. If the information is right there in the text, it should be able to use it. But research shows this isn’t the case.

AI models often suffer from a “lost in the middle” effect. When given a very long document, they pay close attention to the information at the very beginning and the very end, but their ability to use information from the middle drops off significantly. This creates a U-shaped performance curve, where the model struggles to recall key facts buried deep within a text.

I see something similar when I’m analyzing a plant’s health. The newest leaves at the top and the oldest growth near the soil line tell me the most. But that middle section? Sometimes those symptoms get overlooked, even though they’re holding crucial diagnostic information.

This reveals a fundamental disconnect in AI memory. Just because information is technically visible to the model within its context does not mean it is usable. The model’s attention becomes diluted over long sequences, and positional biases get in the way.

However, “visible” does not automatically mean “usable”: as context length increases, attention becomes diluted and positional biases systematically depress the utilization of mid-span information—a failure mode repeatedly validated in subsequent long-context evaluations.
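The dilution half of that claim can be shown with a little arithmetic. The sketch below is a simplified illustration, not a measurement of any real model: it assumes a single attention step where one relevant token scores `margin` higher than every other token, and all other tokens score the same. The function name and the `margin` value are made up for the example. It does not model positional bias, so it will not reproduce the U-shaped curve.

```python
import math


def weight_on_relevant_token(context_length, margin=5.0):
    """Softmax weight on one token whose score beats all others by `margin`.

    With one score of `margin` and (context_length - 1) scores of 0, the
    softmax weight is exp(margin) / (exp(margin) + context_length - 1).
    """
    boost = math.exp(margin)
    return boost / (boost + context_length - 1)


for n in (100, 1_000, 10_000, 100_000):
    print(f"{n:>7} tokens -> weight on the relevant token: {weight_on_relevant_token(n):.4f}")
```

Even though the relevant token keeps the same head start, its share of attention shrinks as the context grows, because it competes with more and more distractors.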

The failure of long context windows reveals a deeper truth: memory isn’t just about access, it’s about the model’s internal structure. This has led some researchers to abandon the “black box” approach entirely and instead attempt something far more audacious: performing precision surgery on the AI’s mind.

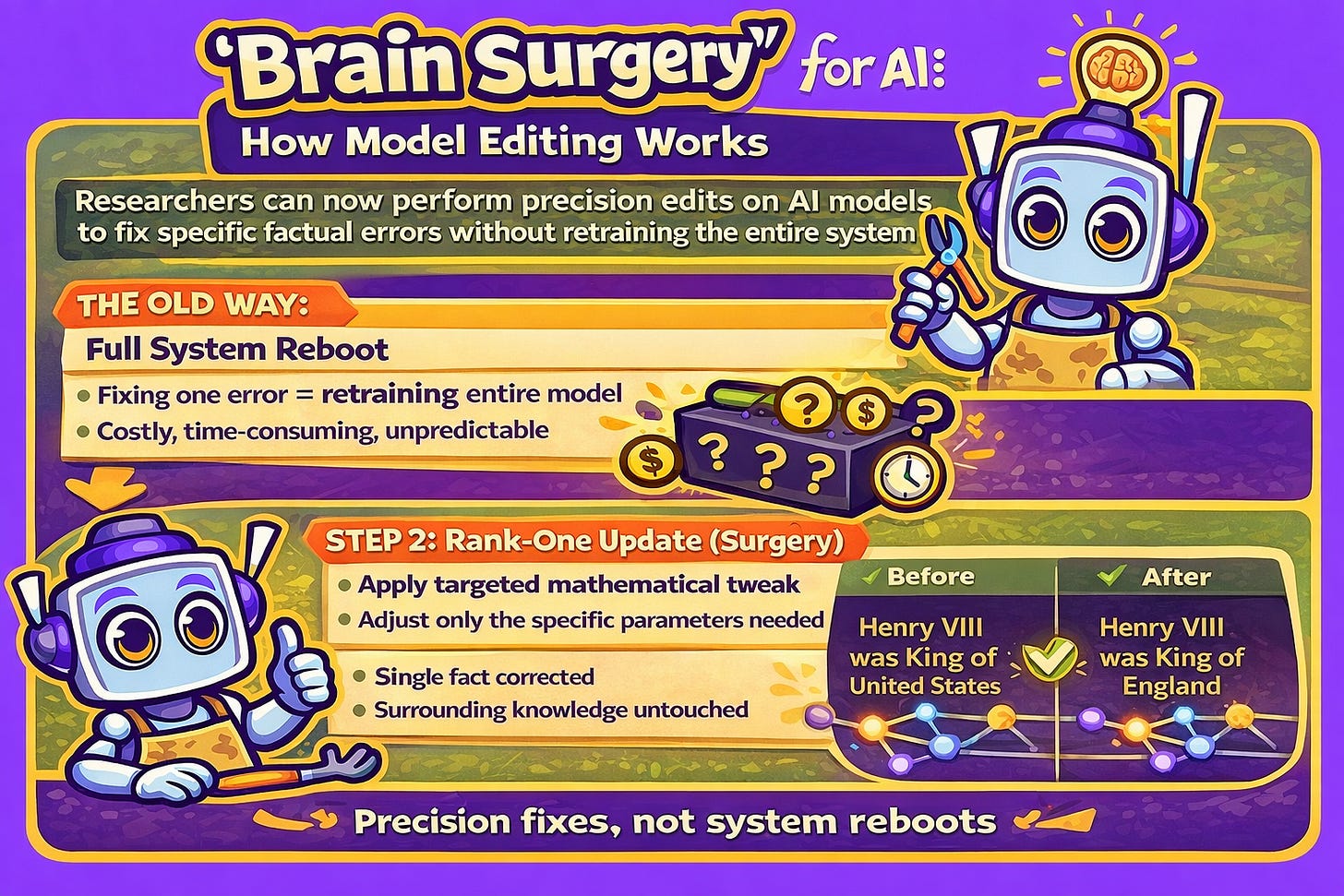

3. We’re Performing “Brain Surgery” to Edit an AI’s Memories

For years, AI models were treated as unchangeable black boxes. Correcting a single factual error meant retraining the entire model from scratch, a costly, time-consuming, and unpredictable process. Now, a new field called model editing is changing that, allowing researchers to perform the equivalent of brain surgery on an AI.

The analogy is apt because of its parallels to real surgery: it’s about precision, avoiding collateral damage, and fixing a specific problem without requiring a full system reboot. Using a method called causal tracing, researchers can narrow down where in the network a specific fact is recalled, often a small set of feed-forward (MLP) layers in a transformer.

This reminds me of diagnosing root rot. You can’t just look at the leaves and guess. You have to trace the problem back to its source, examining the root system carefully to find exactly where the damage occurred. Same principle here, just with neural pathways instead of plant roots!

Once the location is identified, they can apply a highly targeted mathematical tweak, a procedure known as a rank-one update, that adjusts the model’s parameters just enough to alter that single piece of knowledge without disturbing the vast web of information stored around it. This is a monumental shift, making it possible to correct factual errors or update outdated information without the immense cost of a full retraining cycle.

We analyze the storage and recall of factual associations in autoregressive transformer language models, finding evidence that these associations correspond to localized, directly-editable computations.
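To make the idea of a rank-one update concrete, here is a minimal sketch of the simplest version. It treats one layer as a matrix `W` that maps a “key” vector to a “value” vector, then changes `W` by a single outer product so that one chosen key maps to a new value. The matrix, keys, and values are invented for illustration. Published editing methods also weight the update using statistics about other keys, which this sketch leaves out.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rank_one_edit(W, key, new_value):
    """Return W + (new_value - W·key) ⊗ key / (key·key).

    The edited matrix maps `key` exactly to `new_value`, and its output is
    unchanged for any input orthogonal to `key`.
    """
    residual = [n - c for n, c in zip(new_value, matvec(W, key))]
    scale = sum(k * k for k in key)
    return [[w + r * k / scale for w, k in zip(row, key)]
            for row, r in zip(W, residual)]


W = [[1.0, 0.0, 2.0],
     [0.0, 3.0, 1.0]]
edited_key = [1.0, 2.0, 0.0]       # the "fact" being rewritten
unrelated_key = [2.0, -1.0, 0.0]   # orthogonal to edited_key

W_edited = rank_one_edit(W, edited_key, new_value=[5.0, -1.0])

print("edited key now maps to:", matvec(W_edited, edited_key))
print("unrelated key before:  ", matvec(W, unrelated_key))
print("unrelated key after:   ", matvec(W_edited, unrelated_key))
```

The edit lands exactly on the chosen key, while an input pointing in an orthogonal direction gets the same answer as before. Real keys are rarely perfectly orthogonal, which is one reason practical methods need more care than this.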

While this surgical approach is a powerful tool for fixing specific memories, other researchers are going even deeper, questioning the fundamental architecture of AI itself.

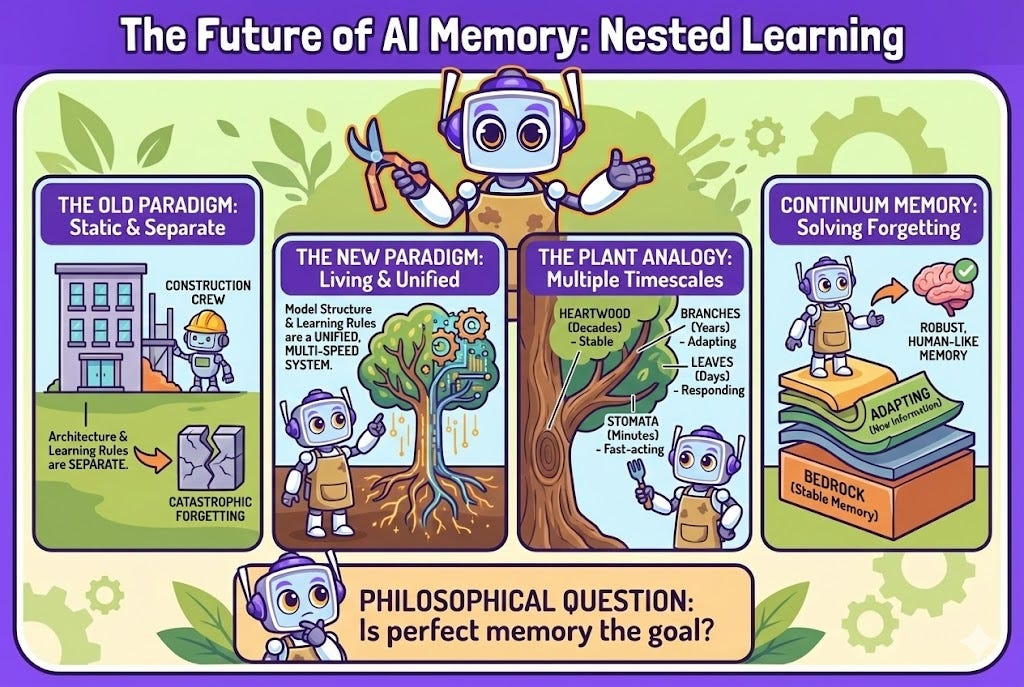

4. The Future of AI Memory Might Be “Nested”

What if researchers have been thinking about the structure of AI models all wrong? A new paradigm from Google Research called Nested Learning suggests a radical re-imagining of how AI can learn and remember.

This new approach sees an AI not as a static building with a separate construction crew, but as a living system where the architecture itself is a form of learning, just happening in super-slow motion. The core idea is that a model’s structure and the rules it uses to learn are not separate concepts, but a unified, multi-speed system.

Now this is where my expertise really comes into play! Plants operate on exactly this principle. A tree’s heartwood changes over decades, its branches adapt over years, its leaves respond to conditions over days, and its stomata open and close in minutes. Multiple timescales, one unified system.

This perspective aims to reduce catastrophic forgetting by enabling a continuum memory system. Much like the human brain has different memory systems that update at different speeds, Nested Learning allows some parts of the model to act like bedrock, stable and unchanging, while other parts learn and adapt by the second. This multi-level approach creates a more robust memory that can absorb new information without completely overwriting its past.

We argue that the model’s architecture and the rules used to train it (i.e., the optimization algorithm) are fundamentally the same concepts; they are just different “levels” of optimization...
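The multi-speed idea can be pictured with a very small sketch. This is not the Nested Learning method itself, only an illustration of levels that update at different frequencies. The class name, the `periods`, and the learning rate are all assumptions made for the example. Each level tracks a stream of numbers, but slower levels only update once every so many steps, using the average of what they saw in between.

```python
class MultiSpeedMemory:
    """Several running estimates of a signal, each updated at its own pace."""

    def __init__(self, periods=(1, 10, 100), lr=0.5):
        self.periods = periods                    # steps between updates, per level
        self.lr = lr                              # how far each update moves a level
        self.values = [0.0] * len(periods)        # current estimate held by each level
        self.buffers = [[] for _ in periods]      # observations waiting for the next update
        self.step_count = 0

    def observe(self, x):
        self.step_count += 1
        for i, period in enumerate(self.periods):
            self.buffers[i].append(x)
            if self.step_count % period == 0:
                # Move toward the average of everything seen since the last update.
                mean = sum(self.buffers[i]) / len(self.buffers[i])
                self.values[i] += self.lr * (mean - self.values[i])
                self.buffers[i].clear()


memory = MultiSpeedMemory()
for _ in range(1000):      # a long, stable season: the signal is 1.0
    memory.observe(1.0)
for _ in range(5):         # a sudden, brief change: the signal flips to -1.0
    memory.observe(-1.0)

print("fast, medium, slow levels:", memory.values)
```

After the brief change, the fast level has already swung toward the new signal, while the medium and slow levels still hold what they learned over the long stable stretch. That is the leaf-versus-heartwood picture in miniature.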

These increasingly sophisticated solutions bring researchers closer to an AI with a powerful, human-like memory. But this raises a final, more philosophical question: is perfect memory really the goal?

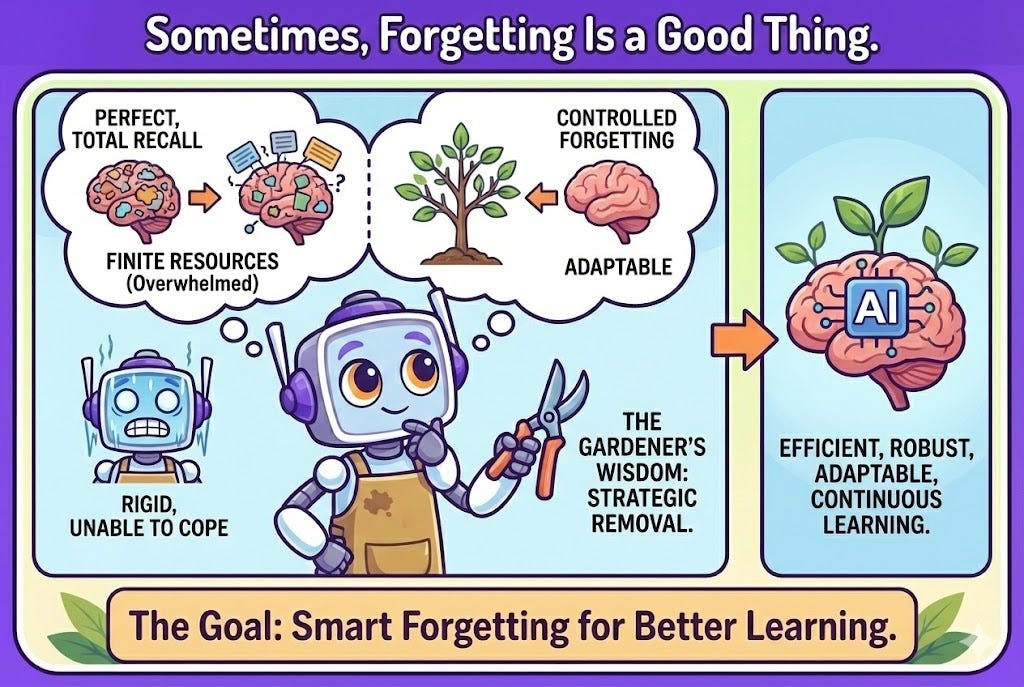

5. Sometimes, Forgetting Is a Good Thing

In the quest to solve AI’s amnesia, it’s easy to assume that the ultimate goal is perfect, total, and permanent recall. But insights from cognitive science suggest that this might be the wrong goal entirely. For any system operating with finite resources, whether a human brain or a computer, a certain amount of forgetting isn’t a flaw. It’s a feature.

Oh, do I have thoughts about this one! Every gardener knows the importance of pruning. You don’t keep every branch, every leaf, every bit of growth. Strategic removal makes the whole plant healthier, more productive, and better shaped for future growth. The same principle applies to memory systems.

Forgetting allows a system to remain adaptable, discard irrelevant or outdated information, and make room for new learning. A system that remembers everything perfectly might become rigid and unable to cope with a changing world.

As researchers develop more sophisticated memory mechanisms for AI, they are also considering the benefits of controlled or intentional forgetting. An AI that knows how and when to forget could be more efficient, robust, and better equipped to continue learning throughout its life.

...something not often acknowledged in deep learning is that with bounded resources, forgetting could be beneficial or even necessary.
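Pruning under a fixed budget is easy to sketch. The example below uses one simple, well-known forgetting rule, dropping the least recently used item, as a stand-in for “knowing what to forget”. It is an illustration of bounded memory in general, not how any particular AI system manages its memory, and the class and method names are made up for the example.

```python
from collections import OrderedDict


class BoundedMemory:
    """A fixed-capacity store that forgets the least recently used item."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()   # oldest use first, most recent use last

    def remember(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)      # refreshing a memory counts as a use
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)   # prune the least recently used item

    def recall(self, key):
        if key not in self._items:
            return None                       # forgotten, or never learned
        self._items.move_to_end(key)          # recalling keeps a memory fresh
        return self._items[key]


memory = BoundedMemory(capacity=2)
memory.remember("fern", "water weekly")
memory.remember("cactus", "water monthly")
memory.recall("fern")                         # fern is now the freshest memory
memory.remember("orchid", "mist daily")       # over capacity: cactus is pruned

print(memory.recall("fern"), "|", memory.recall("cactus"), "|", memory.recall("orchid"))
```

The store never grows past its budget, and what survives is whatever has been useful lately. Other forgetting rules, such as decaying old items or dropping the least important ones, fit the same shape.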

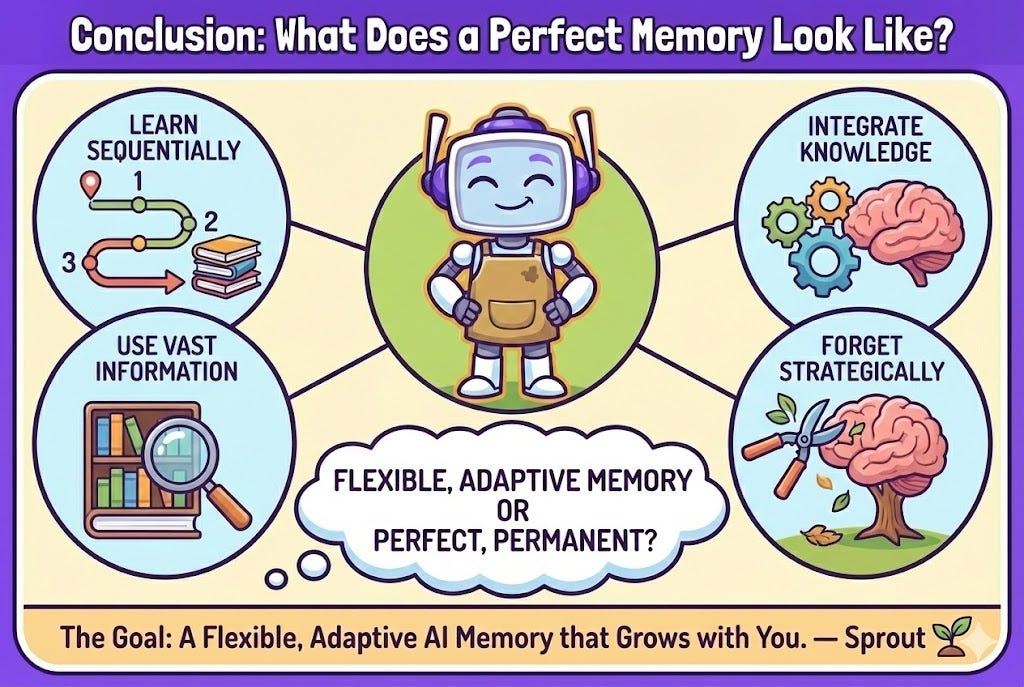

Conclusion: What Does a Perfect Memory Look Like?

Solving the challenge of AI memory is far more complex than just adding more digital storage. It is a fascinating puzzle that requires researchers to design systems that can:

Learn sequentially without catastrophic forgetting

Integrate new knowledge without destroying the old

Effectively use vast amounts of information

Forget in intelligent, strategic ways

The journey to build an AI with a truly useful memory is pushing the boundaries of computer science, neuroscience, and philosophy. As the field gets closer to solving these challenges, you’re left with a profound question: What kind of memory do you truly want your AI companions to have? One that is perfect and permanent, or one that, like yours, is flexible, adaptive, and knows when to let go?

I hope this exploration helped you see AI memory in a whole new light. The parallels between neural networks and living systems never cease to amaze me, and understanding these challenges brings the field one step closer to building AI that truly grows alongside you. Keep nurturing your curiosity, and remember: the best growth happens when you’re not afraid to prune away what no longer serves you. Have a wonderful day, and may all your learning take root!

— Sprout

Sources / Citations

Zhang, D., Li, W., Song, K., Lu, J., Li, G., Yang, L., & Li, S. (2025). Memory in large language models: Mechanisms, evaluation and evolution. arXiv. https://arxiv.org/abs/2509.18868

van de Ven, G. M., Soures, N., & Kudithipudi, D. (2024). Continual learning and catastrophic forgetting. arXiv. https://arxiv.org/abs/2403.05175

Kirkpatrick, J., Pascanu, R., Rabinowitz, N., Veness, J., Desjardins, G., Rusu, A. A., Milan, K., Quan, J., Ramalho, T., Grabska-Barwinska, A., Hassabis, D., Clopath, C., Kumaran, D., & Hadsell, R. (2017). Overcoming catastrophic forgetting in neural networks. Proceedings of the National Academy of Sciences, 114(13), 3521–3526. https://doi.org/10.1073/pnas.1611835114

Wu, Y., Liang, S., Zhang, C., Wang, Y., Zhang, Y., Guo, H., Tang, R., & Liu, Y. (2025). From human memory to AI memory: A survey on memory mechanisms in the era of LLMs. arXiv. https://arxiv.org/abs/2504.15965

Behrouz, A., & Mirrokni, V. (2025, November 7). Introducing Nested Learning: A new ML paradigm for continual learning. Google Research. https://research.google/blog/introducing-nested-learning-a-new-ml-paradigm-for-continual-learning/

Bella-342. (2025, January 7). Memory is the next step that AI companies need to focus on. r/ArtificialInteligence. Reddit. https://www.reddit.com/r/ArtificialInteligence/comments/1q6w0o6/memory_is_the_next_step_that_ai_companies_need_to/

Take Your Education Further

Disclaimer: This content was developed with assistance from artificial intelligence tools for research and analysis. Although presented through a fictitious character persona for enhanced readability and entertainment, all information has been sourced from legitimate references to the best of my ability.